2024-02-21 Pennsylvania State University (Penn State)

<Related information>

- https://www.psu.edu/news/information-sciences-and-technology/story/improving-efficiency-reliability-ai-medical-summarization/

- https://aclanthology.org/2023.emnlp-main.673/

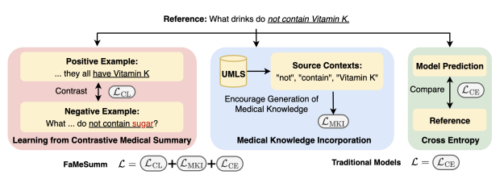

FaMeSumm: Investigating and Improving Faithfulness of Medical Summarization

Nan Zhang, Yusen Zhang, Wu Guo, Prasenjit Mitra, Rui Zhang

Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing

DOI: https://doi.org/10.18653/v1/2023.emnlp-main.673

Abstract

Summaries of medical text shall be faithful by being consistent and factual with source inputs, which is an important but understudied topic for safety and efficiency in healthcare. In this paper, we investigate and improve faithfulness in summarization on a broad range of medical summarization tasks. Our investigation reveals that current summarization models often produce unfaithful outputs for medical input text. We then introduce FaMeSumm, a framework to improve faithfulness by fine-tuning pre-trained language models based on medical knowledge. FaMeSumm performs contrastive learning on designed sets of faithful and unfaithful summaries, and it incorporates medical terms and their contexts to encourage faithful generation of medical terms. We conduct comprehensive experiments on three datasets in two languages: health question and radiology report summarization datasets in English, and a patient-doctor dialogue dataset in Chinese. Results demonstrate that FaMeSumm is flexible and effective by delivering consistent improvements over mainstream language models such as BART, T5, mT5, and PEGASUS, yielding state-of-the-art performances on metrics for faithfulness and general quality. Human evaluation by doctors also shows that FaMeSumm generates more faithful outputs. Our code is available at https://github.com/psunlpgroup/FaMeSumm.
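The contrastive idea in the abstract (training on sets of faithful and unfaithful summaries) can be illustrated with a generic margin-based ranking loss. This is a minimal sketch under stated assumptions, not FaMeSumm's actual objective, which is in the linked repository. It assumes each candidate summary is represented by the model's per-token log-probabilities, scores a summary by their mean, and penalizes every faithful/unfaithful pair whose score gap falls below a hypothetical `margin`. The function names are illustrative only.

```python
from itertools import product
from typing import Sequence


def length_normalized_score(token_logprobs: Sequence[float]) -> float:
    """Mean per-token log-probability of one candidate summary under the model."""
    if not token_logprobs:
        raise ValueError("summary must contain at least one token")
    return sum(token_logprobs) / len(token_logprobs)


def contrastive_margin_loss(
    faithful: Sequence[Sequence[float]],
    unfaithful: Sequence[Sequence[float]],
    margin: float = 1.0,
) -> float:
    """Hinge loss that pushes every faithful summary to score at least `margin`
    above every unfaithful one.

    Hypothetical formulation for illustration; not FaMeSumm's exact loss.
    """
    pos = [length_normalized_score(s) for s in faithful]
    neg = [length_normalized_score(s) for s in unfaithful]
    pairs = list(product(pos, neg))  # every (faithful, unfaithful) pairing
    if not pairs:
        raise ValueError("need at least one faithful and one unfaithful summary")
    # A pair contributes zero once the faithful score leads by >= margin.
    return sum(max(0.0, margin - (p - n)) for p, n in pairs) / len(pairs)


if __name__ == "__main__":
    faithful = [[-0.2, -0.4], [-0.3, -0.3]]    # both average to -0.3
    unfaithful = [[-0.5, -0.9], [-0.1, -0.3]]  # average to -0.7 and -0.2
    print(contrastive_margin_loss(faithful, unfaithful, margin=0.5))
```

In this toy input, the second unfaithful candidate actually outscores both faithful ones, so it dominates the loss; minimizing it would lower the model's likelihood of that summary relative to the faithful ones. In a real fine-tuning setup the scores would come from a model such as BART or T5 and the loss would be combined with the usual generation loss.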