2025-09-04 Mount Sinai Health System (MSHS)

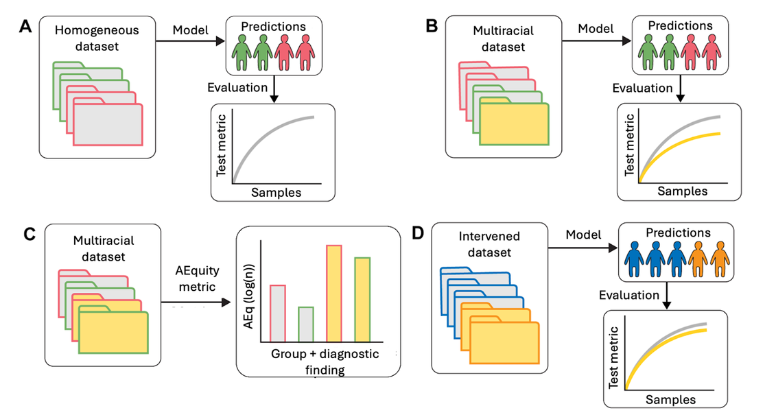

AEquity workflow to identify and mitigate biases in a chest x-ray dataset. Credit: Gulamali, et al., Journal of Medical Internet Research

<Related information>

- https://www.mountsinai.org/about/newsroom/2025/new-ai-tool-addresses-accuracy-and-fairness-in-data-to-improve-health-algorithms

- https://www.jmir.org/2025/1/e71757

Detecting, Characterizing, and Mitigating Implicit and Explicit Racial Biases in Health Care Datasets With Subgroup Learnability: Algorithm Development and Validation Study

Faris Gulamali; Ashwin Shreekant Sawant; Lora Liharska; Carol Horowitz; Lili Chan; Ira Hofer; Karandeep Singh; Lynne Richardson; Emmanuel Mensah; Alexander Charney; David Reich; Jianying Hu; Girish Nadkarni

Journal of Medical Internet Research. Published: January 25, 2025

DOI: https://doi.org/10.2196/71757

Abstract

Background: The growing adoption of diagnostic and prognostic algorithms in health care has led to concerns about the perpetuation of algorithmic bias against disadvantaged groups of individuals. Deep learning methods to detect and mitigate bias have revolved around modifying models, optimization strategies, and threshold calibration with varying levels of success and tradeoffs. However, there have been limited substantive efforts to address bias at the level of the data used to generate algorithms in health care datasets.

Objective: The aim of this study is to create a simple metric (AEquity) that uses a learning curve approximation to distinguish and mitigate bias via guided dataset collection or relabeling.
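To make the phrase "learning curve" concrete, here is a minimal sketch of a per-subgroup learning curve: held-out accuracy as a function of training set size, computed separately for two groups. It is not the AEquity metric itself, whose definition the abstract does not give. The synthetic data, the midpoint-threshold classifier, and every function name below are assumptions made purely for illustration.

```python
import random
from statistics import mean

def make_group(n, separation, rng):
    """Return n (feature, label) pairs; class means are `separation` apart."""
    data = []
    for i in range(n):
        label = i % 2  # alternate labels so both classes are always present
        data.append((rng.gauss(label * separation, 1.0), label))
    return data

def fit_threshold(train):
    """Midpoint between the two class means: a deliberately simple classifier."""
    mean0 = mean(x for x, y in train if y == 0)
    mean1 = mean(x for x, y in train if y == 1)
    return (mean0 + mean1) / 2.0

def accuracy(threshold, test):
    """Fraction of test points where 'x > threshold' matches the label."""
    return mean(1.0 if (x > threshold) == (y == 1) else 0.0 for x, y in test)

def learning_curve(separation, sizes, seed):
    """Held-out accuracy at each training size for one synthetic subgroup."""
    rng = random.Random(seed)
    test = make_group(1000, separation, rng)
    curve = []
    for n in sizes:
        train = make_group(n, separation, rng)
        curve.append(accuracy(fit_threshold(train), test))
    return curve

SIZES = [8, 32, 128]
# Hypothetical subgroups: A has well-separated classes, B has overlapping ones.
curve_a = learning_curve(separation=4.0, sizes=SIZES, seed=0)
curve_b = learning_curve(separation=1.0, sizes=SIZES, seed=1)

for n, a, b in zip(SIZES, curve_a, curve_b):
    print(f"n={n:4d}  group A acc={a:.3f}  group B acc={b:.3f}")
```

In this toy setup, a subgroup whose curve stays lower at the same sample size is harder to learn from the available data, which is the kind of signal that could motivate collecting more data or relabeling for that subgroup.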

Methods: We demonstrate this metric in 2 well-known examples, chest X-rays and health care cost utilization, and detect novel biases in the National Health and Nutrition Examination Survey.

Results: We demonstrated that using AEquity to guide data-centric collection for each diagnostic finding in the chest radiograph dataset decreased bias by between 29% and 96.5% when measured by differences in area under the curve. Next, we examined (1) whether AEquity worked on intersectional populations and (2) whether AEquity is invariant to different types of fairness metrics, not just area under the curve. To do so, we measured bias by false negative rate, precision, and false discovery rate for Black patients on Medicaid, a group at the intersection of race and socioeconomic status. We found that AEquity-based interventions reduced bias across these fairness metrics: overall false negative rate by 33.3% (absolute bias reduction=1.88×10⁻¹, 95% CI 1.4×10⁻¹ to 2.5×10⁻¹; bias reduction of 33.3%, 95% CI 26.6%-40%); precision bias by 94.6% (absolute bias reduction=7.50×10⁻², 95% CI 7.48×10⁻² to 7.51×10⁻²; bias reduction of 94.6%, 95% CI 94.5%-94.7%); and false discovery rate by 94.5% (absolute bias reduction=3.50×10⁻², 95% CI 3.49×10⁻² to 3.50×10⁻²). Similarly, AEquity-guided data collection demonstrated bias reduction of up to 80% on mortality prediction with the National Health and Nutrition Examination Survey (absolute bias reduction=0.08, 95% CI 0.07-0.09). We then benchmarked AEquity against state-of-the-art data-guided debiasing measures, namely balanced empirical risk minimization and calibration, and showed that AEquity-guided data collection outperforms both standard approaches. Moreover, we demonstrated that AEquity works on fully connected networks; convolutional neural networks such as ResNet-50; transformer architectures such as ViT-B-16, a vision transformer with 86 million parameters; and nonparametric methods such as Light Gradient-Boosting Machine.
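The results above report bias as a between-group gap in a metric, together with absolute and percentage reductions of that gap. As a minimal sketch of that arithmetic, the code below computes false negative rate, precision, and false discovery rate per group, then the gap and its reduction. The labels and predictions are invented toy values, and the function names and the exact definitions of "gap" and "reduction" are assumptions rather than the authors' published procedure.

```python
def rates(y_true, y_pred):
    """False negative rate, precision, and false discovery rate for one group."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "fnr": fn / (fn + tp),        # missed positives among all positives
        "precision": tp / (tp + fp),  # correct among predicted positives
        "fdr": fp / (tp + fp),        # incorrect among predicted positives
    }

def gap(group_a, group_b, metric):
    """Bias taken here as the absolute between-group difference in a metric."""
    return abs(group_a[metric] - group_b[metric])

def relative_reduction(gap_before, gap_after):
    """Fraction of the original gap removed by an intervention."""
    return (gap_before - gap_after) / gap_before

# Toy data (hypothetical): same labels, different predictions per group.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
group_a = rates(y_true, [1, 1, 1, 0, 1, 0, 0, 0])
group_b_before = rates(y_true, [1, 0, 0, 0, 1, 0, 0, 0])
group_b_after = rates(y_true, [1, 1, 0, 0, 1, 0, 0, 0])

for metric in ("fnr", "precision", "fdr"):
    before = gap(group_a, group_b_before, metric)
    after = gap(group_a, group_b_after, metric)
    print(f"{metric}: absolute reduction={before - after:.3f}, "
          f"relative reduction={relative_reduction(before, after):.1%}")
```

Reporting both figures is useful because a small absolute reduction can still be a large percentage of a gap that was small to begin with.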

Conclusions: In short, we demonstrated that AEquity is a robust tool by applying it to different datasets, algorithms, and intersectional analyses and measuring its effectiveness with respect to a range of traditional fairness metrics.