EPFL researchers have developed a new technique that uses a protein language model to generate protein sequences whose properties are comparable to those of natural sequences. The method matches or outperforms traditional Potts models, especially for small protein families, and shows promise for protein design.

2023-03-09 École Polytechnique Fédérale de Lausanne (EPFL)

The research team reports that the deep-learning neural network MSA Transformer offers a promising way to design new proteins with specific structures and functions.

The study, published in eLife, also provides a solid benchmark for assessing the quality, novelty, and diversity of generated protein sequences.

<Related information>

- https://actu.epfl.ch/news/a-new-tool-for-protein-sequence-generation-and-des/

- https://elifesciences.org/articles/79854

Generative power of a protein language model trained on multiple sequence alignments

Damiano Sgarbossa, Umberto Lupo, Anne-Florence Bitbol

eLife Published: Feb 3, 2023

DOI: https://doi.org/10.7554/eLife.79854

Abstract

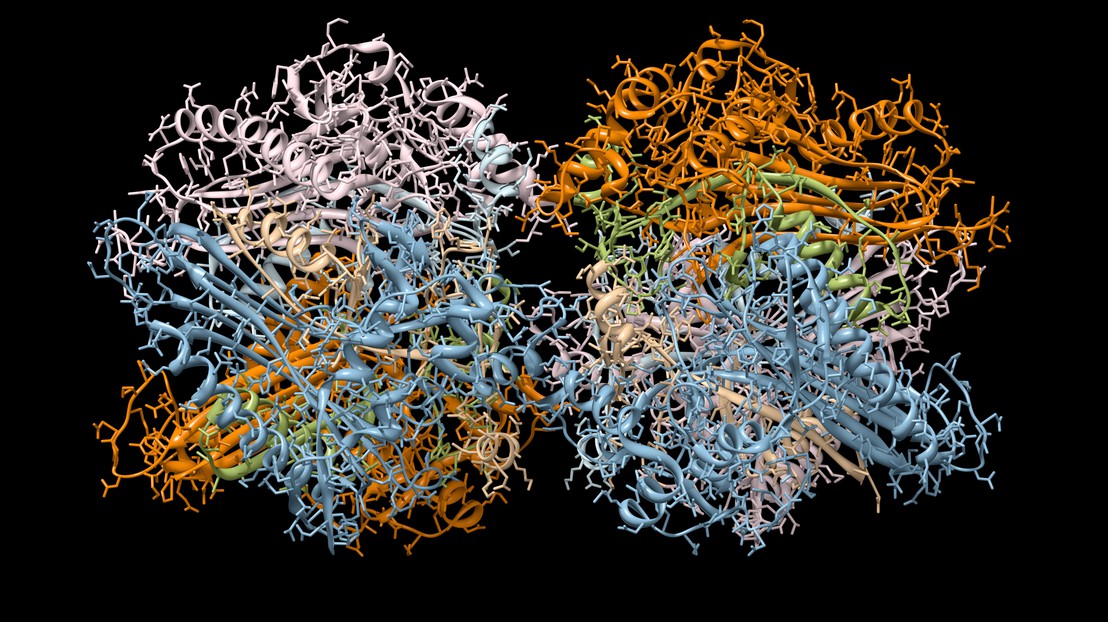

Computational models starting from large ensembles of evolutionarily related protein sequences capture a representation of protein families and learn constraints associated with protein structure and function. They thus open the possibility for generating novel sequences belonging to protein families. Protein language models trained on multiple sequence alignments, such as MSA Transformer, are highly attractive candidates to this end. We propose and test an iterative method that directly employs the masked language modeling objective to generate sequences using MSA Transformer. We demonstrate that the resulting sequences score as well as natural sequences, for homology, coevolution and structure-based measures. For large protein families, our synthetic sequences have similar or better properties compared to sequences generated by Potts models, including experimentally validated ones. Moreover, for small protein families, our generation method based on MSA Transformer outperforms Potts models. Our method also more accurately reproduces the higher-order statistics and the distribution of sequences in sequence space of natural data than Potts models. MSA Transformer is thus a strong candidate for protein sequence generation and protein design.
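The iterative idea described in the abstract amounts to a loop: mask part of the MSA, let a masked language model fill in the masked positions, and repeat. Below is a minimal sketch of that loop, not the authors' implementation. `profile_predictor` is a hypothetical stand-in for MSA Transformer that only uses per-column amino-acid frequencies, so it captures none of the coevolution the real model learns. The function names, the `?` mask symbol, the toy MSA, and the defaults (200 iterations, masking probability 0.1) are illustrative assumptions. Hamming distance to the closest natural sequence is used here only as a simple novelty proxy.

```python
import random
from collections import Counter

MASK = "?"                          # hypothetical mask symbol for this sketch
ALPHABET = "ACDEFGHIKLMNPQRSTVWY-"  # 20 amino acids plus the alignment gap

def profile_predictor(natural_msa, pseudocount=0.5):
    """HYPOTHETICAL stand-in for MSA Transformer.

    Returns per-column frequencies of the natural MSA and ignores the masked
    input, so unlike MSA Transformer it models no coupling between columns.
    """
    n_cols = len(natural_msa[0])
    profiles = []
    for j in range(n_cols):
        counts = Counter(seq[j] for seq in natural_msa)
        total = len(natural_msa) + pseudocount * len(ALPHABET)
        profiles.append({a: (counts[a] + pseudocount) / total for a in ALPHABET})

    def predict(masked_msa):
        # One distribution per (row, column), mimicking a masked-LM output shape.
        return [[profiles[j] for j in range(n_cols)] for _ in masked_msa]

    return predict

def iterative_masked_generation(msa, predict, n_iterations=200, mask_prob=0.1,
                                greedy=False, seed=0):
    """Repeatedly mask random positions and refill them from the model."""
    rng = random.Random(seed)
    current = [list(seq) for seq in msa]
    for _ in range(n_iterations):
        # 1. Mask each position independently with probability mask_prob.
        masked = [(i, j) for i, row in enumerate(current)
                  for j in range(len(row)) if rng.random() < mask_prob]
        masked_msa = [row[:] for row in current]
        for i, j in masked:
            masked_msa[i][j] = MASK
        # 2. Ask the model for token distributions given the masked MSA.
        probs = predict(["".join(row) for row in masked_msa])
        # 3. Refill each masked position with the argmax or a random sample.
        for i, j in masked:
            dist = probs[i][j]
            if greedy:
                current[i][j] = max(dist, key=dist.get)
            else:
                tokens = list(dist)
                weights = [dist[t] for t in tokens]
                current[i][j] = rng.choices(tokens, weights=weights)[0]
    return ["".join(row) for row in current]

def hamming(a, b):
    """Number of aligned positions at which two sequences differ."""
    return sum(x != y for x, y in zip(a, b))

# Usage with a toy, made-up MSA.
natural = ["MKV-LA", "MKI-LA", "MRVGLA", "MKVGIA"]
predict = profile_predictor(natural)
synthetic = iterative_masked_generation(natural, predict)
for s in synthetic:
    # Novelty proxy: distance to the closest natural sequence.
    print(s, min(hamming(s, n) for n in natural))
```

Swapping in a real masked language model would only change `predict`; the loop stays the same. With this context-free stand-in, greedy filling (`greedy=True`) would collapse every row toward the column consensus, which is why the usage samples instead.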