2025-03-17 Mount Sinai Health System (MSHS)

<Related information>

- https://www.mountsinai.org/about/newsroom/2025/new-ai-model-analyzes-full-night-of-sleep-with-high-accuracy-in-largest-study-of-its-kind

- https://academic.oup.com/sleep/advance-article-abstract/doi/10.1093/sleep/zsaf061/8075113

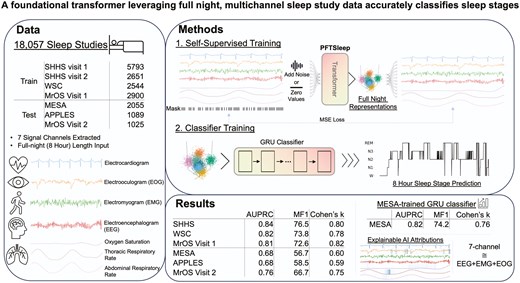

A foundational transformer leveraging full night, multichannel sleep study data accurately classifies sleep stages

Benjamin Fox, Joy Jiang, Sajila Wickramaratne, Patricia Kovatch, Mayte Suarez-Farinas, Neomi A Shah, Ankit Parekh, Girish N Nadkarni

Sleep, Published: 13 March 2025

DOI: https://doi.org/10.1093/sleep/zsaf061

Abstract

Study Objectives

To evaluate whether a foundational transformer using 8-hour, multichannel polysomnogram (PSG) data can effectively encode signals and classify sleep stages with state-of-the-art performance.

Methods

The Sleep Heart Health Study, Wisconsin Sleep Cohort, and Osteoporotic Fractures in Men (MrOS) Study Visit 1 were used for training, and the Multi-Ethnic Study of Atherosclerosis (MESA), Apnea Positive Pressure Long-term Efficacy Study (APPLES), and MrOS Visit 2 served as independent test sets. We developed PFTSleep, a self-supervised foundational transformer that encodes full night sleep studies with brain, movement, cardiac, oxygen, and respiratory channels. These representations were used to train another model to classify sleep stages. We compared our results to existing methods, examined differences in performance by varying channel input data and training dataset size, and investigated an AI explainability tool to analyze decision processes.

Results

PFTSleep was trained with 13,888 sleep studies and tested on 4,169 independent studies. Cohen's kappa scores were 0.81 for our held-out set, 0.59 for APPLES, 0.60 for MESA, and 0.75 for MrOS Visit 2. Performance increased to 0.76 on a held-out MESA set when MESA was included in the training of the classifier head but not the transformer. Compared to other state-of-the-art AI models, our model shows high performance across diverse datasets while using only task-agnostic PSG representations from a foundational transformer as input for sleep stage classification.
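Cohen's kappa, the metric reported above, measures agreement between two sets of labels after correcting for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the chance agreement derived from each rater's label frequencies. The following is a minimal sketch of that calculation, not the authors' evaluation code. The function name `cohens_kappa`, the stage labels, and the toy epoch sequences are illustrative assumptions and do not come from the study.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa between two equal-length sequences of categorical labels."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label sequences must be non-empty and equal in length")
    n = len(labels_a)

    # Observed agreement: fraction of epochs where both label sources agree.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Chance agreement: for each stage, multiply the two marginal frequencies
    # and sum over stages.
    count_a = Counter(labels_a)
    count_b = Counter(labels_b)
    p_e = sum(count_a[s] * count_b[s] for s in count_a) / (n * n)

    if p_e == 1.0:
        # Degenerate case: both sources use one identical label throughout.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical toy data: eight scored epochs from a human scorer and a model.
scorer = ["W", "N1", "N2", "N2", "N3", "REM", "N2", "W"]
model = ["W", "N2", "N2", "N2", "N3", "REM", "N1", "W"]

kappa = cohens_kappa(scorer, model)
print(round(kappa, 3))
```

In this toy case the two sequences agree on 6 of 8 epochs (p_o = 0.75) and the chance agreement is 0.25, so kappa is about 0.667, lower than the raw agreement because some matches would occur by chance alone.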

Conclusions

Full night, multichannel PSG representations from a foundational transformer enable accurate sleep stage classification comparable to state-of-the-art AI methods across diverse datasets.