2025-10-16 Tufts University

<Related information>

- https://now.tufts.edu/2025/10/16/flight-simulator-brain-reveals-how-we-learn-and-why-minds-sometimes-go-course

- https://www.nature.com/articles/s41467-025-63994-y

- https://www.nature.com/articles/s41467-025-63995-x

The neural basis for uncertainty processing in hierarchical decision making

Mien Brabeeba Wang, Nancy Lynch & Michael M. Halassa

Nature Communications, Published: 16 October 2025

DOI: https://doi.org/10.1038/s41467-025-63994-y

Abstract

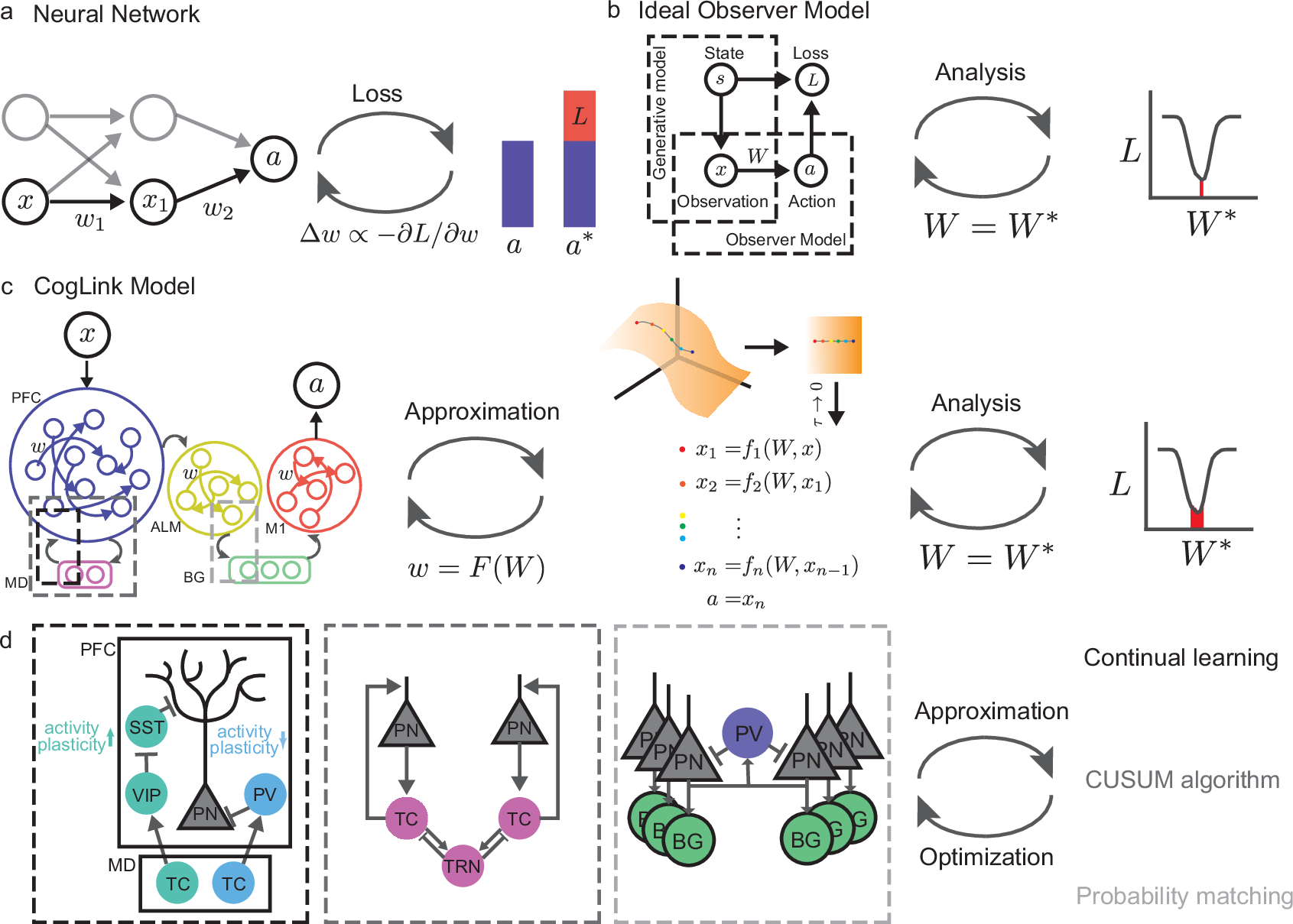

Hierarchical decisions in natural environments require processing uncertainty across multiple levels, but existing models struggle to explain how animals perform flexible, goal-directed behaviors under such conditions. Here we introduce CogLinks, biologically grounded neural architectures that combine corticostriatal circuits for reinforcement learning and frontal thalamocortical networks for executive control. Through mathematical analysis and targeted lesions, we show that these systems specialize in different forms of uncertainty, and their interaction supports hierarchical decisions by regulating efficient exploration and strategy switching. We apply CogLinks to a computational psychiatry problem, linking neural dysfunction in schizophrenia to atypical reasoning patterns in decision making. Overall, CogLinks fills an important gap in the computational landscape, providing a bridge from neural substrates to higher cognition.

Thalamic regulation of reinforcement learning strategies across prefrontal-striatal networks

Bin A. Wang, Mien Brabeeba Wang, Norman H. Lam, Liu Mengxing, Shumei Li, Ralf D. Wimmer, Pedro M. Paz-Alonso, Michael M. Halassa & Burkhard Pleger

Nature Communications, Published: 16 October 2025

DOI: https://doi.org/10.1038/s41467-025-63995-x

Abstract

Human decision-making involves model-free and model-based reinforcement learning (RL) strategies, largely implemented by prefrontal-striatal circuits. Combining human brain imaging with neural network modelling in a probabilistic reversal learning task, we identify a unique role for the mediodorsal thalamus (MD) in arbitrating between RL strategies. While both dorsal PFC and the striatum support rule switching, the former does so when subjects predominantly adopt a model-based strategy, and the latter when they adopt a model-free one. The lateral and medial subdivisions of MD likewise engage these modes, each with distinct PFC connectivity. Notably, prefrontal transthalamic processing increases during the shift from stable rule use to model-based updating, with model-free updates at intermediate levels. Our CogLinks model shows that model-free strategies emerge when prefrontal-thalamic mechanisms for context inference fail, resulting in a slower overwriting of prefrontal strategy representations, a phenomenon we empirically validate with fMRI decoding analysis. These findings reveal how prefrontal transthalamic pathways implement flexible RL-based cognition.
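For readers unfamiliar with the terminology, the contrast between model-free updating and context inference in a probabilistic reversal learning task can be illustrated with a toy simulation. The sketch below is not the CogLinks model and not the task used in the paper. The two-armed task, the class names, and every parameter (`block_len`, `p_reward`, `alpha`, `epsilon`, `hazard`) are assumptions chosen only for illustration. One agent caches a value per option and updates it with a delta rule. The other keeps a Bayesian belief about which option is currently better and allows for occasional reversals.

```python
import random


def run_reversal_task(agent, n_trials=400, block_len=50, p_reward=0.8, seed=0):
    """Toy two-armed reversal task: the better arm flips every block_len trials.

    Returns the fraction of trials on which the agent chose the better arm.
    """
    rng = random.Random(seed)
    correct = 0
    for t in range(n_trials):
        best = (t // block_len) % 2  # hidden context: which arm is better now
        choice = agent.choose(rng)
        p = p_reward if choice == best else 1.0 - p_reward
        reward = 1 if rng.random() < p else 0
        agent.update(choice, reward)
        correct += int(choice == best)
    return correct / n_trials


class ModelFreeAgent:
    """Delta-rule learner: one cached value per arm, no notion of context."""

    def __init__(self, alpha=0.2, epsilon=0.1):
        self.q = [0.5, 0.5]
        self.alpha = alpha      # learning rate (assumed value)
        self.epsilon = epsilon  # exploration rate (assumed value)

    def choose(self, rng):
        if rng.random() < self.epsilon:
            return rng.randrange(2)
        return 0 if self.q[0] >= self.q[1] else 1

    def update(self, choice, reward):
        # Move the chosen arm's value toward the observed reward.
        self.q[choice] += self.alpha * (reward - self.q[choice])


class ContextInferenceAgent:
    """Bayesian learner: tracks the belief that arm 0 is currently the better arm."""

    def __init__(self, p_reward=0.8, hazard=0.02):
        self.belief = 0.5         # P(arm 0 is better)
        self.p_reward = p_reward  # assumed known to the agent
        self.hazard = hazard      # assumed per-trial reversal probability

    def choose(self, rng):
        return 0 if self.belief >= 0.5 else 1

    def update(self, choice, reward):
        # Reward probability of the chosen arm under each hypothesis.
        p_if_0 = self.p_reward if choice == 0 else 1.0 - self.p_reward
        p_if_1 = self.p_reward if choice == 1 else 1.0 - self.p_reward
        like_0 = p_if_0 if reward else 1.0 - p_if_0
        like_1 = p_if_1 if reward else 1.0 - p_if_1
        posterior = like_0 * self.belief / (
            like_0 * self.belief + like_1 * (1.0 - self.belief)
        )
        # Allow for a possible reversal before the next trial.
        self.belief = posterior * (1.0 - self.hazard) + (1.0 - posterior) * self.hazard


if __name__ == "__main__":
    print("model-free accuracy:", run_reversal_task(ModelFreeAgent()))
    print("context-inference accuracy:", run_reversal_task(ContextInferenceAgent()))
```

In this toy setting the context-inference agent can change its choice after a few unexpected outcomes because it reasons about a hidden reversal. The delta-rule agent has to relearn its cached values trial by trial. This is only a loose analogy to the slower overwriting of strategy representations described in the abstract.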