2023-05-19 University of California San Diego (UCSD)

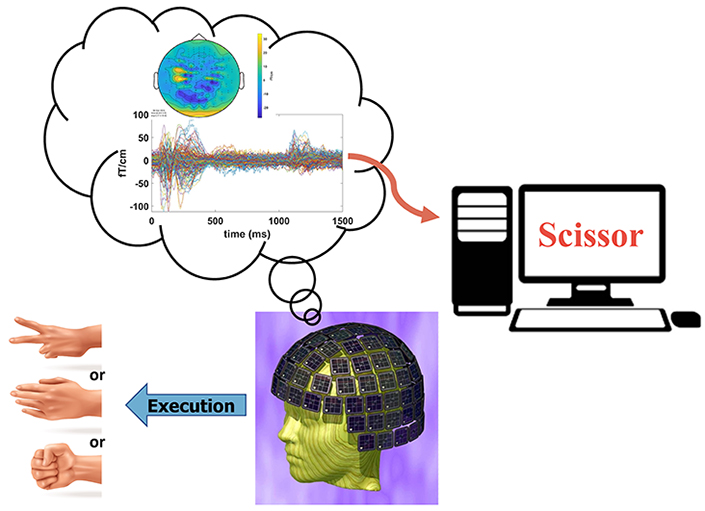

In the study, volunteers were equipped with a MEG helmet and randomly instructed to make one of the gestures used in the game Rock Paper Scissors. A high-performing deep learning model interpreted the MEG data, distinguishing among hand gestures with more than 85% accuracy.

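◆Using magnetoencephalography (MEG), the study reports the best results to date for distinguishing single hand gestures with a noninvasive technique. The work lays a foundation for future development of MEG-based brain-computer interfaces.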

<Related information>

- https://today.ucsd.edu/story/new-study-shows-noninvasive-brain-imaging-can-distinguish-among-hand-gestures

- https://academic.oup.com/cercor/advance-article-abstract/doi/10.1093/cercor/bhad173/7161766?redirectedFrom=fulltext&login=false

Magnetoencephalogram-based brain–computer interface for hand-gesture decoding using deep learning

Yifeng Bu, Deborah L Harrington, Roland R Lee, Qian Shen, Annemarie Angeles-Quinto, Zhengwei Ji, Hayden Hansen, Jaqueline Hernandez-Lucas, Jared Baumgartner, Tao Song, Sharon Nichols, Dewleen Baker, Ramesh Rao, Imanuel Lerman, Tuo Lin, Xin Ming Tu, Mingxiong Huang

Cerebral Cortex Published: 13 May 2023

DOI: https://doi.org/10.1093/cercor/bhad173

Abstract

Advancements in deep learning algorithms over the past decade have led to extensive developments in brain–computer interfaces (BCI). A promising imaging modality for BCI is magnetoencephalography (MEG), which is a non-invasive functional imaging technique. The present study developed a MEG sensor-based BCI neural network to decode Rock-Paper-Scissors gestures (MEG-RPSnet). Unique preprocessing pipelines in tandem with convolutional neural network deep-learning models accurately classified gestures. On a single-trial basis, we found an average of 85.56% classification accuracy in 12 subjects. Our MEG-RPSnet model outperformed two state-of-the-art neural network architectures for electroencephalogram-based BCI as well as a traditional machine learning method, and demonstrated equivalent and/or better performance than machine learning methods that have employed invasive, electrocorticography-based BCI using the same task. In addition, MEG-RPSnet classification performance using an intra-subject approach outperformed a model that used a cross-subject approach. Remarkably, we also found that when using only central-parietal-occipital regional sensors or occipitotemporal regional sensors, the deep learning model achieved classification performances that were similar to the whole-brain sensor model. The MEG-RPSnet model also distinguished neuronal features of individual hand gestures with very good accuracy. Altogether, these results show that noninvasive MEG-based BCI applications hold promise for future BCI developments in hand-gesture decoding.
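
The abstract contrasts an intra-subject approach with a cross-subject approach and reports a single-trial accuracy averaged over subjects. The sketch below is only an illustration of what those terms commonly mean, not the authors' pipeline: the trial tuples, the function names, the `test_every` parameter, and the toy data are all hypothetical, and the paper's actual preprocessing and split scheme are not described here.

```python
from collections import defaultdict

# Hypothetical toy data: (subject_id, true_gesture, predicted_gesture).
# These are made-up values for illustration, not results from the study.
TOY_TRIALS = [
    ("s1", "rock", "rock"),
    ("s1", "paper", "paper"),
    ("s1", "scissors", "rock"),
    ("s1", "rock", "rock"),
    ("s2", "rock", "rock"),
    ("s2", "paper", "scissors"),
]


def mean_subject_accuracy(trials):
    """Score each trial individually, then average the per-subject accuracies."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for subject, truth, predicted in trials:
        total[subject] += 1
        correct[subject] += int(truth == predicted)
    per_subject = {s: correct[s] / total[s] for s in total}
    return per_subject, sum(per_subject.values()) / len(per_subject)


def intra_subject_split(trials, subject, test_every=5):
    """Assumed intra-subject setup: train and test on one person's own trials."""
    own = [t for t in trials if t[0] == subject]
    train = [t for i, t in enumerate(own) if i % test_every != 0]
    test = [t for i, t in enumerate(own) if i % test_every == 0]
    return train, test


def cross_subject_split(trials, held_out):
    """Assumed cross-subject setup: train on other people, test on a held-out one."""
    train = [t for t in trials if t[0] != held_out]
    test = [t for t in trials if t[0] == held_out]
    return train, test


if __name__ == "__main__":
    per_subject, mean_acc = mean_subject_accuracy(TOY_TRIALS)
    print(per_subject, mean_acc)
```

Under these assumptions, a cross-subject model never sees the test person's data during training, which is one plausible reason an intra-subject model could score higher on the same task.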