2025-04-15 Massachusetts Institute of Technology (MIT)

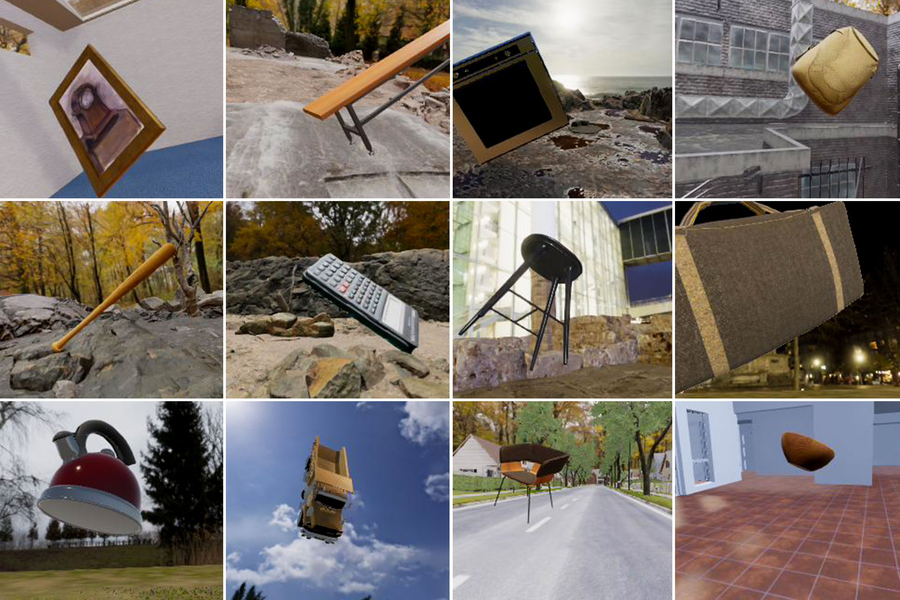

The models were trained on a dataset of synthetic images like the ones pictured, with objects such as tea kettles or calculators superimposed on different backgrounds. Researchers trained the models to identify one or more spatial features of an object, including rotation, location, and distance. Image: Courtesy of the researchers

<Related information>

- https://news.mit.edu/2025/visual-pathway-brain-may-do-more-than-recognize-objects-0415

- https://openreview.net/forum?id=emMMa4q0qw

- https://www.nature.com/articles/nn.4247

Vision CNNs trained to estimate spatial latents learned similar ventral-stream-aligned representations

Yudi Xie, Weichen Huang, Esther Alter, Jeremy Schwartz, Joshua B. Tenenbaum, James J. DiCarlo

OpenReview, Published: 23 Jan 2025

Abstract

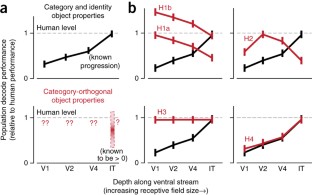

Studies of the functional role of the primate ventral visual stream have traditionally focused on object categorization, often ignoring — despite much prior evidence — its role in estimating “spatial” latents such as object position and pose. Most leading ventral stream models are derived by optimizing networks for object categorization, which seems to imply that the ventral stream is also derived under such an objective. Here, we explore an alternative hypothesis: Might the ventral stream be optimized for estimating spatial latents? And a closely related question: How different — if at all — are representations learned from spatial latent estimation compared to categorization? To ask these questions, we leveraged synthetic image datasets generated by a 3D graphic engine and trained convolutional neural networks (CNNs) to estimate different combinations of spatial and category latents. We found that models trained to estimate just a few spatial latents achieve neural alignment scores comparable to those trained on hundreds of categories, and the spatial latent performance of models strongly correlates with their neural alignment. Spatial latent and category-trained models have very similar — but not identical — internal representations, especially in their early and middle layers. We provide evidence that this convergence is partly driven by non-target latent variability in the training data, which facilitates the implicit learning of representations of those non-target latents. Taken together, these results suggest that many training objectives, such as spatial latents, can lead to similar models aligned neurally with the ventral stream. Thus, one should not assume that the ventral stream is optimized for object categorization only. As a field, we need to continue to sharpen our measures of comparing models to brains to better understand the functional roles of the ventral stream.
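The abstract reports that spatial-latent and category-trained models end up with very similar (though not identical) internal representations, especially in early and middle layers, but it does not say how that similarity is measured. One widely used measure for comparing layers of different networks is linear centered kernel alignment (CKA). The sketch below is a minimal, stdlib-only illustration of linear CKA on two activation matrices recorded on the same images (rows = images, columns = units). The function names are hypothetical, and we do not claim this is the authors' exact metric.

```python
from typing import List

# Activation matrix: one row per stimulus (image), one column per unit/feature.
Matrix = List[List[float]]


def center_columns(x: Matrix) -> Matrix:
    """Subtract each column's mean so every unit has zero mean across stimuli."""
    n = len(x)
    means = [sum(row[j] for row in x) / n for j in range(len(x[0]))]
    return [[v - m for v, m in zip(row, means)] for row in x]


def cross_frob_sq(a: Matrix, b: Matrix) -> float:
    """Squared Frobenius norm of a^T b, where a is n x p and b is n x q."""
    total = 0.0
    for i in range(len(a[0])):
        for j in range(len(b[0])):
            s = sum(a[k][i] * b[k][j] for k in range(len(a)))
            total += s * s
    return total


def linear_cka(x: Matrix, y: Matrix) -> float:
    """Linear CKA: ||Y^T X||_F^2 / (||X^T X||_F * ||Y^T Y||_F) on column-centered data."""
    xc, yc = center_columns(x), center_columns(y)
    numerator = cross_frob_sq(xc, yc)
    denominator = (cross_frob_sq(xc, xc) * cross_frob_sq(yc, yc)) ** 0.5
    return numerator / denominator
```

Linear CKA equals 1 for identical representations and is unchanged by rotating or uniformly rescaling the feature space, which is why it suits comparisons between networks whose individual units need not correspond one-to-one. Comparing, say, a spatial-latent model's layer against a category-trained model's layer on the same image set would give a single score between 0 and 1.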

Explicit information for category-orthogonal object properties increases along the ventral stream

Ha Hong, Daniel L K Yamins, Najib J Majaj & James J DiCarlo

Nature Neuroscience, Published: 22 February 2016

DOI: https://doi.org/10.1038/nn.4247

Abstract

Extensive research has revealed that the ventral visual stream hierarchically builds a robust representation for supporting visual object categorization tasks. We systematically explored the ability of multiple ventral visual areas to support a variety of ‘category-orthogonal’ object properties such as position, size and pose. For complex naturalistic stimuli, we found that the inferior temporal (IT) population encodes all measured category-orthogonal object properties, including those properties often considered to be low-level features (for example, position), more explicitly than earlier ventral stream areas. We also found that the IT population better predicts human performance patterns across properties. A hierarchical neural network model based on simple computational principles generates these same cross-area patterns of information. Taken together, our empirical results support the hypothesis that all behaviorally relevant object properties are extracted in concert up the ventral visual hierarchy, and our computational model explains how that hierarchy might be built.
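The abstract says IT encodes category-orthogonal properties such as position "more explicitly" than earlier areas. In this line of work, explicitness is commonly operationalized as how well a simple linear readout of population responses predicts a property on held-out stimuli. The sketch below illustrates that idea in stdlib Python: a least-squares linear readout (with a tiny ridge term for numerical stability) scored by held-out R². The function names, the ridge value, and the train/test split are illustrative assumptions, not the paper's exact decoding procedure.

```python
from typing import List, Sequence


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the square system a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):  # back-substitution
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def fit_linear_readout(
    x: Sequence[Sequence[float]], y: Sequence[float], ridge: float = 1e-6
) -> List[float]:
    """Weights (last entry = intercept) mapping population responses to a property."""
    rows = [list(r) + [1.0] for r in x]  # append a bias column
    p = len(rows[0])
    # Normal equations X^T X w = X^T y; the tiny ridge on every diagonal entry
    # (intercept included) keeps the system solvable for near-collinear units.
    xtx = [
        [sum(r[i] * r[j] for r in rows) + (ridge if i == j else 0.0) for j in range(p)]
        for i in range(p)
    ]
    xty = [sum(r[i] * t for r, t in zip(rows, y)) for i in range(p)]
    return solve(xtx, xty)


def r_squared(
    w: Sequence[float], x: Sequence[Sequence[float]], y: Sequence[float]
) -> float:
    """Held-out coefficient of determination for a fitted linear readout."""
    preds = [sum(wi * xi for wi, xi in zip(w, list(r) + [1.0])) for r in x]
    mean_y = sum(y) / len(y)
    ss_res = sum((t - p) ** 2 for t, p in zip(y, preds))
    ss_tot = sum((t - mean_y) ** 2 for t in y)
    return 1.0 - ss_res / ss_tot
```

As a usage example, simulate object positions in [-1, 1], build one population whose units shift linearly with position plus a little noise, and another whose units depend only on sign-blind features such as pos² and |pos|. Fitting on the first 200 stimuli and scoring on the rest gives a held-out R² near 1 for the first population and near 0 for the second. "More explicit" in this sense means a higher held-out score from the same simple readout.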