2025-10-20 New York University (NYU)

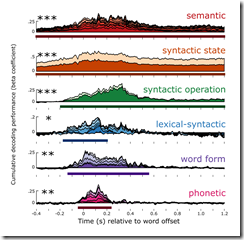

The figure shows how the brain decodes the different aspects of words over time, with phonetics (i.e., sounds) processed most quickly and semantic meaning taking longer. Because people hear these words in the context of a story, the meanings of words can be anticipated, resulting in semantic meaning being processed earlier than phonetics. Image courtesy of Laura Gwilliams.

<Related information>

- https://www.nyu.edu/about/news-publications/news/2025/october/when-is-the-brain-like-a-subway-station–when-it-s-processing-ma.html

- https://www.pnas.org/doi/10.1073/pnas.2422097122

Hierarchical dynamic coding coordinates speech comprehension in the human brain

Laura Gwilliams, Alec Marantz, David Poeppel, and Jean-Rémi King

Proceedings of the National Academy of Sciences, Published: October 17, 2025

DOI: https://doi.org/10.1073/pnas.2422097122

Significance

To understand speech, the brain generates a hierarchy of neural representations, which map from sound to meaning. We recorded whole-brain activity while participants listened to audiobooks and modeled neural activity using time-resolved machine learning methods. Our analyses reveal that different neural patterns are activated over time to process each feature of the hierarchy, and abstract features have a slower spatial trajectory than sensory features. This spatiotemporally dynamic code enables a local history of inputs to be encoded in parallel, across a hierarchy from sound to meaning whose levels are also encoded in parallel. This leads us to propose a dynamic model of cortical language processing: hierarchical dynamic coding (HDC).

Abstract

Speech comprehension involves transforming an acoustic waveform into meaning. To do so, the human brain generates a hierarchy of features that converts the sensory input into increasingly abstract language properties. However, little is known about how rapid incoming sequences of hierarchical features are continuously coordinated. Here, we propose that each language feature is supported by a dynamic neural code, which represents the sequence history of hierarchical features in parallel. To test this “hierarchical dynamic coding” (HDC) hypothesis, we use time-resolved decoding of brain activity to track the construction, maintenance, and update of a comprehensive hierarchy of language features spanning phonetic, word form, lexical–syntactic, syntactic, and semantic representations. For this, we recorded 21 native English-speaking participants with magnetoencephalography (MEG) while they listened to two hours of short stories in English. Our analyses reveal three main findings. First, the brain represents and simultaneously maintains a sequence of hierarchical features. Second, the duration of these representations depends on their level in the language hierarchy. Third, each representation is maintained by a dynamic neural code, which evolves at a speed commensurate with its corresponding linguistic level. This HDC preserves the maintenance of information over time while limiting destructive interference between successive features. Overall, HDC reveals how the human brain maintains and updates the continuously unfolding language hierarchy during natural speech comprehension, thereby anchoring linguistic theories to their biological implementations.
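The idea of a dynamic neural code probed with time-resolved decoding can be illustrated with a toy simulation. The sketch below is not the authors' pipeline: the data are synthetic, and the function names (`simulate`, `fit_ridge`, `temporal_generalization`), the closed-form ridge decoder, and all parameter values are assumptions chosen for illustration. A binary feature is encoded by a different sensor pattern at each time sample; a decoder is trained at each time sample and tested at every other one. If the code is dynamic, decoding should succeed when training and test times match and fall toward chance when they differ.

```python
import numpy as np

def simulate(n_trials=400, n_sensors=30, n_times=12, scale=3.0, seed=0):
    """Synthetic trials: one binary feature, one sensor pattern per time sample."""
    rng = np.random.default_rng(seed)
    y = rng.integers(0, 2, n_trials) * 2 - 1           # labels in {-1, +1}
    # Orthonormal patterns, one per time sample, so the code "moves" over time.
    q, _ = np.linalg.qr(rng.standard_normal((n_sensors, n_times)))
    patterns = scale * q.T                              # n_times x n_sensors
    X = y[:, None, None] * patterns[None, :, :]         # trials x times x sensors
    X = X + rng.standard_normal(X.shape)                # unit-variance sensor noise
    return X, y

def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge weights for the data at a single time sample."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)

def temporal_generalization(X, y, n_train):
    """Train a decoder at each time sample and test it at every time sample."""
    n_times = X.shape[1]
    X_train, y_train = X[:n_train], y[:n_train]
    X_test, y_test = X[n_train:], y[n_train:]
    scores = np.zeros((n_times, n_times))
    for t_train in range(n_times):
        w = fit_ridge(X_train[:, t_train, :], y_train)
        for t_test in range(n_times):
            pred = np.sign(X_test[:, t_test, :] @ w)
            scores[t_train, t_test] = np.mean(pred == y_test)
    return scores

X, y = simulate()
scores = temporal_generalization(X, y, n_train=300)
same_time = np.mean(np.diag(scores))
cross_time = np.mean(scores[~np.eye(scores.shape[0], dtype=bool)])
print(f"same-time accuracy: {same_time:.2f}, cross-time accuracy: {cross_time:.2f}")
```

In this toy setting, high same-time accuracy combined with near-chance cross-time accuracy is the signature of a code that keeps the information available while changing the pattern that carries it. That is one way to picture how successive inputs could be held at once without overwriting each other. Real MEG analyses would add cross-validation, preprocessing, and statistics that are omitted here.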