2025-05-20 University of Houston (UH)

<Related information>

- https://uh.edu/news-events/stories/2025/may/05202025-vannguyen-radiologist-gaze.php

- https://www.nature.com/articles/s41598-025-97935-y

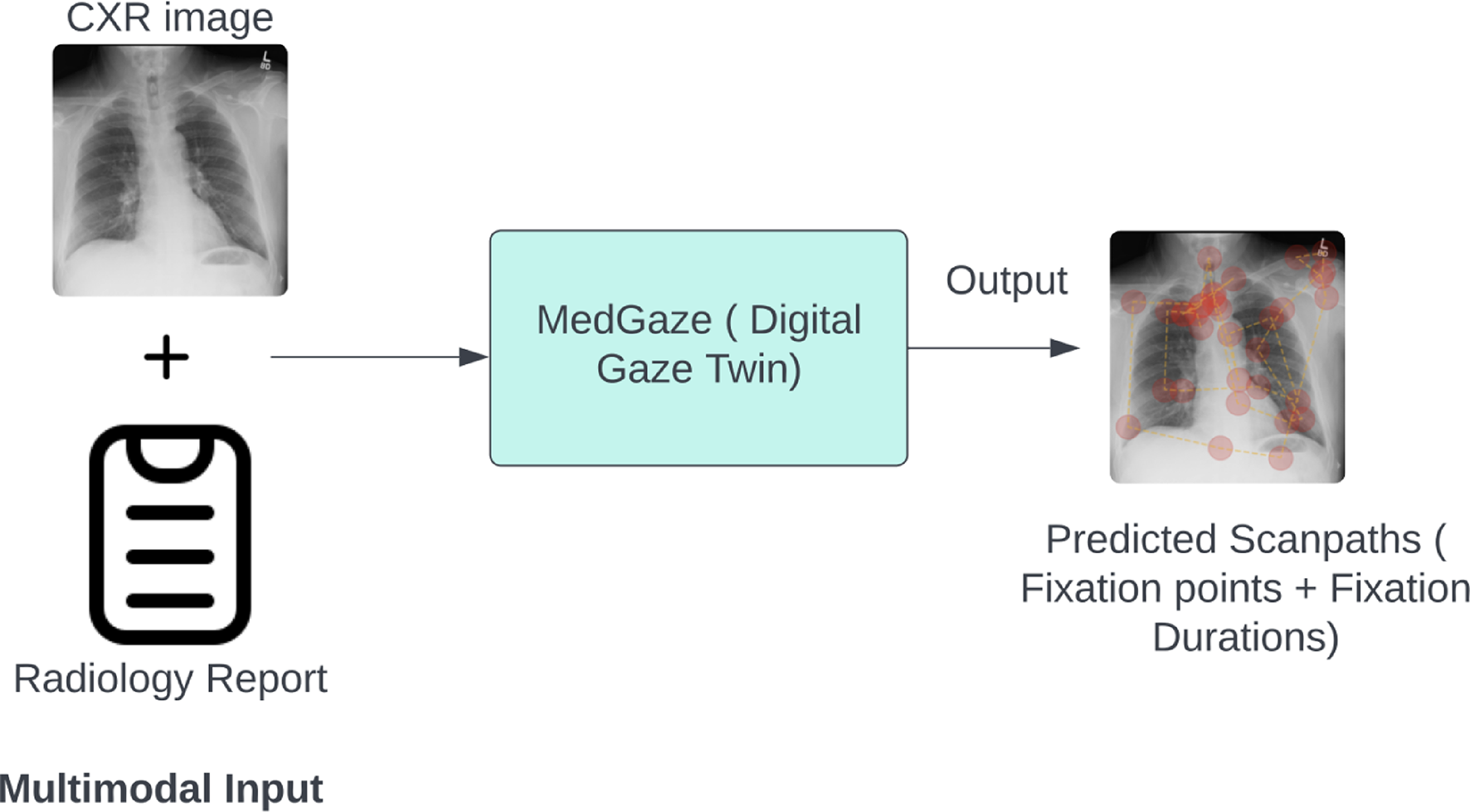

Modeling radiologists’ cognitive processes using a digital gaze twin to enhance radiology training

Akash Awasthi, Anh Mai Vu, Ngan Le, Zhigang Deng, Supratik Maulik, Rishi Agrawal, Carol C. Wu & Hien Van Nguyen

Scientific Reports, Published: 21 April 2025

DOI: https://doi.org/10.1038/s41598-025-97935-y

Abstract

Predicting human gaze behavior is critical for advancing interactive systems and improving diagnostic accuracy in medical imaging. We present MedGaze, a novel system inspired by the “Digital Gaze Twin” concept, which models radiologists’ cognitive processes and predicts scanpaths in chest X-ray (CXR) images. Using a two-stage training approach—Vision to Radiology Report Learning (VR2) and Vision-Language Cognition Learning (VLC)—MedGaze combines visual features with radiology reports, leveraging large datasets like MIMIC to replicate radiologists’ visual search patterns. MedGaze outperformed state-of-the-art methods on the EGD-CXR and REFLACX datasets, achieving IoU scores of 0.41 [95% CI 0.40, 0.42] vs. 0.27 [95% CI 0.26, 0.28], Correlation Coefficient (CC) of 0.50 [95% CI 0.48, 0.51] vs. 0.37 [95% CI 0.36, 0.41], and Multimatch scores of 0.80 [95% CI 0.79, 0.81] vs. 0.71 [95% CI 0.70, 0.71], with similar improvements on REFLACX. It also demonstrated its ability to assess clinical workload through fixation duration, showing a significant Spearman rank correlation of 0.65 (p < 0.001) with true clinical workload ranks on EGD-CXR. The human evaluation revealed that 13 out of 20 predicted scanpaths closely resembled expert patterns, with 18 out of 20 covering 60–80% of key regions. MedGaze’s ability to minimize redundancy and emulate expert gaze behavior enhances training and diagnostics, offering valuable insights into radiologist decision-making and improving clinical outcomes.
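For readers unfamiliar with the metrics named in the abstract, the following is a minimal sketch of how IoU, Correlation Coefficient (CC), and Spearman rank correlation can be computed. It is not the authors' evaluation code. The function names, the binarization threshold of 0.5, and the toy arrays are all assumptions made for illustration, and the Spearman helper assumes there are no tied values.

```python
import numpy as np

def heatmap_iou(pred, true, threshold=0.5):
    """IoU of two gaze heatmaps after binarizing each at `threshold`.

    The threshold is an assumed cutoff, not a value taken from the paper.
    """
    p = np.asarray(pred) >= threshold
    t = np.asarray(true) >= threshold
    union = np.logical_or(p, t).sum()
    if union == 0:
        return 0.0  # both maps are empty after thresholding
    return float(np.logical_and(p, t).sum() / union)

def heatmap_cc(pred, true):
    """Pearson correlation coefficient between two flattened heatmaps."""
    p = np.asarray(pred, dtype=float).ravel()
    t = np.asarray(true, dtype=float).ravel()
    return float(np.corrcoef(p, t)[0, 1])

def spearman_rank(x, y):
    """Spearman correlation as the Pearson correlation of ranks (no ties assumed)."""
    rx = np.argsort(np.argsort(x))
    ry = np.argsort(np.argsort(y))
    return float(np.corrcoef(rx, ry)[0, 1])

# Toy 3x3 heatmaps (hypothetical values, not data from the study).
pred_map = np.array([[0.9, 0.8, 0.1],
                     [0.2, 0.7, 0.0],
                     [0.0, 0.1, 0.0]])
true_map = np.array([[1.0, 0.6, 0.0],
                     [0.6, 0.9, 0.0],
                     [0.0, 0.0, 0.0]])

# Hypothetical predicted fixation durations and true workload scores per case.
pred_duration = [3.0, 1.0, 2.0, 5.0]
true_workload = [2.5, 0.5, 3.5, 6.0]

iou = heatmap_iou(pred_map, true_map)
cc = heatmap_cc(pred_map, true_map)
rho = spearman_rank(pred_duration, true_workload)
print(f"IoU={iou:.2f}  CC={cc:.2f}  Spearman={rho:.2f}")
```

In this sketch, IoU measures the overlap of the attended regions, CC measures how similarly attention is distributed over pixels, and the Spearman value measures whether predicted fixation durations order the cases the same way as the true workload does.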