2022-04-28 New York University (NYU)

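- The study, published in Nature Scientific Reports, reveals the potential value of using both human and AI methods in making medical diagnoses, following an analysis that points to the potential for human-technology complementarity in cancer screening.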

<Related information>

- https://www.nyu.edu/about/news-publications/news/2022/april/radiologists–ai-systems-show-differences-in-breast-cancer-scree.html

- https://www.nature.com/articles/s41598-022-10526-z

Differences between human and machine perception in medical diagnosis

Taro Makino, Stanisław Jastrzębski, Witold Oleszkiewicz, Celin Chacko, Robin Ehrenpreis, Naziya Samreen, Chloe Chhor, Eric Kim, Jiyon Lee, Kristine Pysarenko, Beatriu Reig, Hildegard Toth, Divya Awal, Linda Du, Alice Kim, James Park, Daniel K. Sodickson, Laura Heacock, Linda Moy, Kyunghyun Cho & Krzysztof J. Geras

Nature Scientific Reports Published: 27 April 2022

DOI:https://doi.org/10.1038/s41598-022-10526-z

Abstract

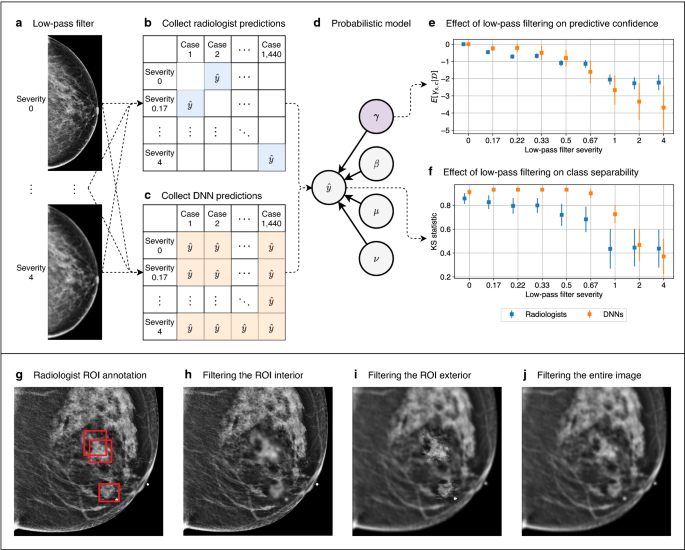

Deep neural networks (DNNs) show promise in image-based medical diagnosis, but cannot be fully trusted since they can fail for reasons unrelated to underlying pathology. Humans are less likely to make such superficial mistakes, since they use features that are grounded on medical science. It is therefore important to know whether DNNs use different features than humans. Towards this end, we propose a framework for comparing human and machine perception in medical diagnosis. We frame the comparison in terms of perturbation robustness, and mitigate Simpson’s paradox by performing a subgroup analysis. The framework is demonstrated with a case study in breast cancer screening, where we separately analyze microcalcifications and soft tissue lesions. While it is inconclusive whether humans and DNNs use different features to detect microcalcifications, we find that for soft tissue lesions, DNNs rely on high frequency components ignored by radiologists. Moreover, these features are located outside of the region of the images found most suspicious by radiologists. This difference between humans and machines was only visible through subgroup analysis, which highlights the importance of incorporating medical domain knowledge into the comparison.
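
To make the idea of "perturbation robustness with subgroup analysis" more concrete, here is a minimal Python sketch. It is not the authors' implementation. It assumes a Gaussian low-pass filter as the perturbation (the abstract only says that high frequency components matter, not which filter is used), and the helper names `gaussian_low_pass`, `high_freq_energy`, and `robustness_by_subgroup`, as well as the subgroup labels, are hypothetical.

```python
import numpy as np


def _frequency_radius(shape):
    """Radial spatial frequency (cycles per pixel) for each FFT bin."""
    fy = np.fft.fftfreq(shape[0])[:, None]
    fx = np.fft.fftfreq(shape[1])[None, :]
    return np.sqrt(fx ** 2 + fy ** 2)


def gaussian_low_pass(image, sigma):
    """Attenuate high spatial frequencies with a Gaussian mask in the Fourier domain.

    sigma is the standard deviation of the mask in cycles per pixel;
    a smaller sigma removes more high frequency content.
    """
    radius = _frequency_radius(image.shape)
    mask = np.exp(-(radius ** 2) / (2.0 * sigma ** 2))
    filtered = np.fft.ifft2(np.fft.fft2(image) * mask)
    return np.real(filtered)


def high_freq_energy(image, cutoff=0.25):
    """Fraction of spectral energy above the cutoff frequency (cycles per pixel)."""
    spectrum = np.abs(np.fft.fft2(image)) ** 2
    radius = _frequency_radius(image.shape)
    return float(spectrum[radius > cutoff].sum() / spectrum.sum())


def robustness_by_subgroup(scores_clean, scores_filtered, subgroup_labels):
    """Mean drop in a reader's score after perturbation, computed per subgroup.

    Reporting the drop separately for each subgroup (assumed labels, e.g.
    microcalcifications vs. soft tissue lesions) avoids pooling effects
    that can hide or reverse a trend, as in Simpson's paradox.
    """
    scores_clean = np.asarray(scores_clean, dtype=float)
    scores_filtered = np.asarray(scores_filtered, dtype=float)
    subgroup_labels = np.asarray(subgroup_labels)
    drops = {}
    for label in np.unique(subgroup_labels):
        selected = subgroup_labels == label
        drops[str(label)] = float(
            np.mean(scores_clean[selected] - scores_filtered[selected])
        )
    return drops
```

In use, the same filtered images would be shown to both the radiologists and the DNN, and `robustness_by_subgroup` would be called once per reader type. A large drop for one reader and not the other within a subgroup suggests that they rely on different features there.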