2025-02-06 University of Texas at Austin (UT Austin)

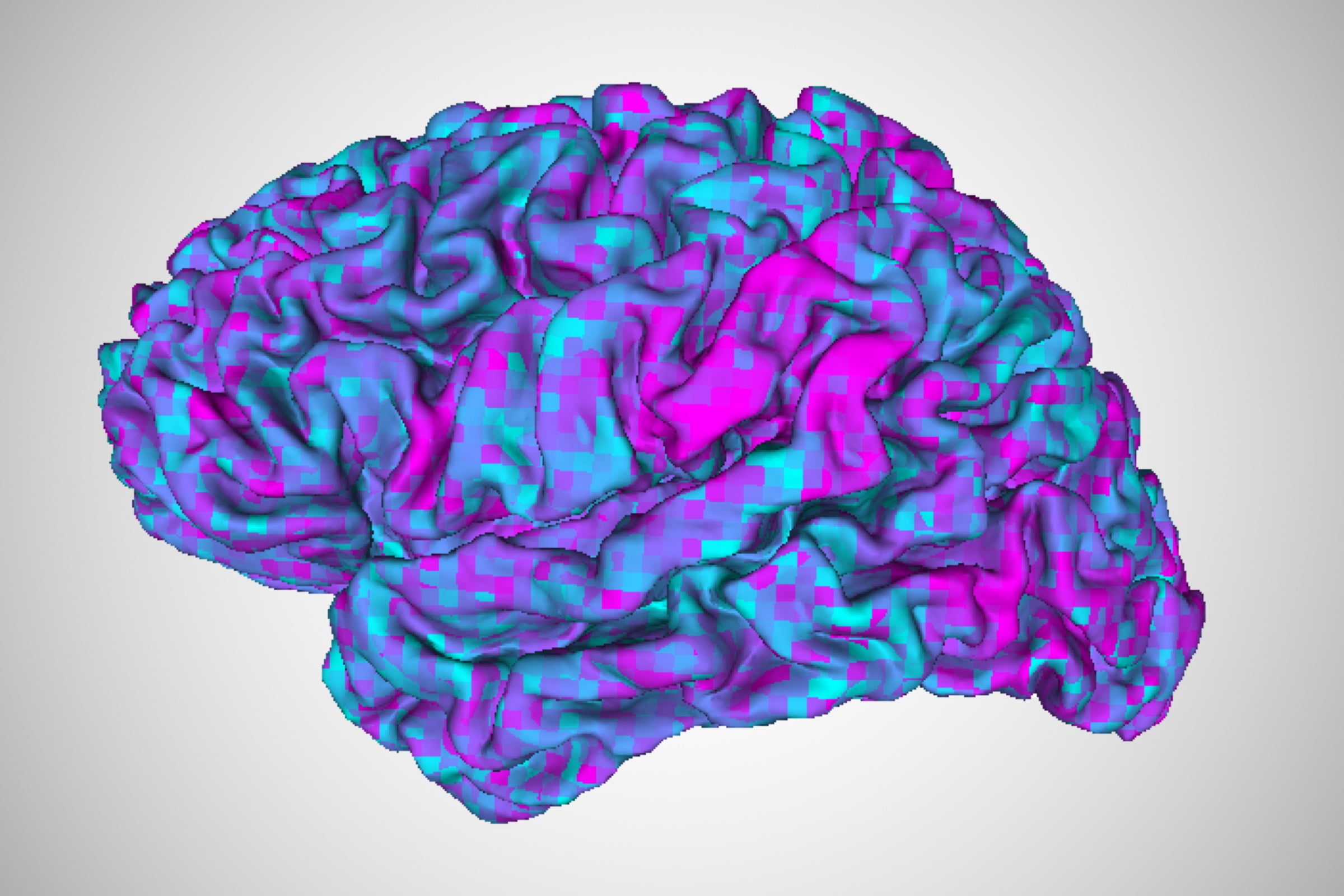

Brain activity like this, measured in an fMRI machine, can be used to train a brain decoder to decipher what a person is thinking about. In this latest study, UT Austin researchers have developed a method to adapt their brain decoder to new users far faster than the original training, even when the user has difficulty comprehending language. Credit: Jerry Tang/University of Texas at Austin.

<Related information>

- https://news.utexas.edu/2025/02/06/improved-brain-decoder-holds-promise-for-communication-in-people-with-aphasia/

- https://www.sciencedirect.com/science/article/abs/pii/S0960982225000545?via%3Dihub

- https://medibio.tiisys.com/110420/

Semantic language decoding across participants and stimulus modalities

Jerry Tang, Alexander G. Huth

Current Biology, published February 6, 2025

DOI:https://doi.org/10.1016/j.cub.2025.01.024

Highlights

- Semantic decoders can be transferred across participants using functional alignment

- Semantic representations can be aligned using responses to either stories or movies

- Cross-participant semantic decoding is robust to simulated lesions

Summary

Brain decoders that reconstruct language from semantic representations have the potential to improve communication for people with impaired language production. However, training a semantic decoder for a participant currently requires many hours of brain responses to linguistic stimuli, and people with impaired language production often also have impaired language comprehension. In this study, we tested whether language can be decoded from a goal participant without using any linguistic training data from that participant. We trained semantic decoders on brain responses from separate reference participants and then used functional alignment to transfer the decoders to the goal participant. Cross-participant decoder predictions were semantically related to the stimulus words, even when functional alignment was performed using movies with no linguistic content. To assess how much semantic representations are shared between language and vision, we compared functional alignment accuracy using story and movie stimuli and found that performance was comparable in most cortical regions. Finally, we tested whether cross-participant decoders could be robust to lesions by excluding brain regions from the goal participant prior to functional alignment and found that cross-participant decoders do not depend on data from any single brain region. These results demonstrate that cross-participant decoding can reduce the amount of linguistic training data required from a goal participant and potentially enable language decoding from participants who struggle with both language production and language comprehension.
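As a rough illustration of the functional alignment idea described in the summary, the sketch below fits a linear map from a goal participant's responses to a reference participant's responses on shared stimuli. Responses from the goal participant can then be projected into the reference participant's space, where a decoder trained on the reference participant could be applied. This is a minimal sketch under stated assumptions, not the authors' implementation: the summary does not specify the alignment method, so ridge regression is swapped in here, and the function name `fit_alignment`, the array shapes, and the synthetic data are all hypothetical.

```python
import numpy as np


def fit_alignment(goal_resp, ref_resp, alpha=1.0):
    """Fit a ridge-regression map W such that goal_resp @ W approximates ref_resp.

    goal_resp: (n_timepoints, n_goal_voxels) responses of the goal participant
    ref_resp:  (n_timepoints, n_ref_voxels) responses of a reference participant
               to the same stimuli (stories or movies)
    alpha:     ridge penalty (assumed value, would need tuning on real data)
    """
    n_goal_voxels = goal_resp.shape[1]
    gram = goal_resp.T @ goal_resp + alpha * np.eye(n_goal_voxels)
    return np.linalg.solve(gram, goal_resp.T @ ref_resp)


# Synthetic stand-in data (hypothetical): real fMRI responses would replace this.
rng = np.random.default_rng(0)
n_time, n_goal_vox, n_ref_vox = 200, 30, 20
true_map = rng.normal(size=(n_goal_vox, n_ref_vox))

# "Shared stimulus" responses used only to estimate the alignment.
goal_train = rng.normal(size=(n_time, n_goal_vox))
ref_train = goal_train @ true_map + 0.1 * rng.normal(size=(n_time, n_ref_vox))

W = fit_alignment(goal_train, ref_train, alpha=1.0)

# Project held-out goal-participant responses into the reference space.
goal_test = rng.normal(size=(50, n_goal_vox))
ref_test = goal_test @ true_map
pred = goal_test @ W

# Agreement between projected and actual reference-space responses.
corr = np.corrcoef(pred.ravel(), ref_test.ravel())[0, 1]
print(f"held-out correlation: {corr:.3f}")
```

In this framing, the simulated-lesion test in the summary would correspond to dropping columns of the goal participant's response matrix before calling `fit_alignment`, and the story-versus-movie comparison would correspond to changing which shared stimuli supply the training rows.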