2025-03-31 University of California, Berkeley (UCB)

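A research team from the University of California, Berkeley and the University of California, San Francisco has developed a neuroprosthesis that converts brain signals into speech in real time for people who have lost the ability to speak due to severe paralysis.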

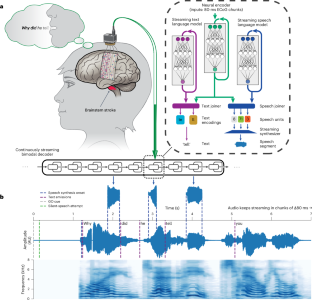

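The technology samples neural data from the motor cortex and uses AI to synthesize speech. With earlier techniques, there was a delay of about 8 seconds between a speech attempt and the generated audio; the new streaming approach makes audio output possible within 1 second.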

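This advance is expected to let people with severe paralysis hold more natural conversations and to improve their quality of life. The researchers aim to develop the technology further so that it benefits more people.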

<Related information>

- https://engineering.berkeley.edu/news/2025/03/brain-to-voice-neuroprosthesis-restores-naturalistic-speech/

- https://www.nature.com/articles/s41593-025-01905-6

- https://www.nature.com/articles/s41586-023-06443-4

A streaming brain-to-voice neuroprosthesis to restore naturalistic communication

Kaylo T. Littlejohn, Cheol Jun Cho, Jessie R. Liu, Alexander B. Silva, Bohan Yu, Vanessa R. Anderson, Cady M. Kurtz-Miott, Samantha Brosler, Anshul P. Kashyap, Irina P. Hallinan, Adit Shah, Adelyn Tu-Chan, Karunesh Ganguly, David A. Moses, Edward F. Chang & Gopala K. Anumanchipalli

Nature Neuroscience, Published: 31 March 2025

DOI: https://doi.org/10.1038/s41593-025-01905-6

Abstract

Natural spoken communication happens instantaneously. Speech delays longer than a few seconds can disrupt the natural flow of conversation. This makes it difficult for individuals with paralysis to participate in meaningful dialogue, potentially leading to feelings of isolation and frustration. Here we used high-density surface recordings of the speech sensorimotor cortex in a clinical trial participant with severe paralysis and anarthria to drive a continuously streaming naturalistic speech synthesizer. We designed and used deep learning recurrent neural network transducer models to achieve online large-vocabulary intelligible fluent speech synthesis personalized to the participant’s preinjury voice with neural decoding in 80-ms increments. Offline, the models demonstrated implicit speech detection capabilities and could continuously decode speech indefinitely, enabling uninterrupted use of the decoder and further increasing speed. Our framework also successfully generalized to other silent-speech interfaces, including single-unit recordings and electromyography. Our findings introduce a speech-neuroprosthetic paradigm to restore naturalistic spoken communication to people with paralysis.
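To make the latency argument concrete, the following is a minimal sketch of why decoding in fixed increments shortens the wait for the first audible output. It is not the authors' model: `toy_decoder` is a hypothetical stand-in for the recurrent neural network transducer, and the 1000 Hz sample rate and the 2-second utterance are assumptions chosen only for illustration. The 80-ms increment is the one figure taken from the abstract.

```python
from typing import Iterator, List, Sequence, Tuple

CHUNK_MS = 80  # decoding increment reported in the abstract


def chunk_signal(samples: Sequence[float], sample_rate_hz: int,
                 chunk_ms: int = CHUNK_MS) -> Iterator[Sequence[float]]:
    """Yield consecutive, non-overlapping windows of chunk_ms milliseconds."""
    chunk_len = sample_rate_hz * chunk_ms // 1000
    for start in range(0, len(samples) - chunk_len + 1, chunk_len):
        yield samples[start:start + chunk_len]


def toy_decoder(chunk: Sequence[float]) -> float:
    """Hypothetical stand-in for the neural decoder: the mean of the window."""
    return sum(chunk) / len(chunk)


def stream_decode(samples: Sequence[float],
                  sample_rate_hz: int) -> List[Tuple[int, float]]:
    """Return (time_available_ms, output) pairs.

    Each output becomes available as soon as its own window has been
    recorded, so the first one arrives after a single increment.
    """
    results = []
    for i, chunk in enumerate(chunk_signal(samples, sample_rate_hz)):
        results.append(((i + 1) * CHUNK_MS, toy_decoder(chunk)))
    return results


def batch_first_output_ms(samples: Sequence[float], sample_rate_hz: int) -> int:
    """A whole-utterance decoder emits nothing until recording has finished."""
    return len(samples) * 1000 // sample_rate_hz


# Assumed example: a 2-second attempt sampled at 1000 Hz.
signal = [float(n) for n in range(2000)]
streamed = stream_decode(signal, sample_rate_hz=1000)
first_streamed_ms = streamed[0][0]
first_batch_ms = batch_first_output_ms(signal, sample_rate_hz=1000)
```

In this toy setup the streaming path produces its first output after one 80-ms window, while the whole-utterance path cannot produce anything before the 2-second recording ends. Real systems add model inference time on top of both figures, which this sketch ignores.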

A high-performance neuroprosthesis for speech decoding and avatar control

Sean L. Metzger, Kaylo T. Littlejohn, Alexander B. Silva, David A. Moses, Margaret P. Seaton, Ran Wang, Maximilian E. Dougherty, Jessie R. Liu, Peter Wu, Michael A. Berger, Inga Zhuravleva, Adelyn Tu-Chan, Karunesh Ganguly, Gopala K. Anumanchipalli & Edward F. Chang

Nature, Published: 23 August 2023

DOI: https://doi.org/10.1038/s41586-023-06443-4

Abstract

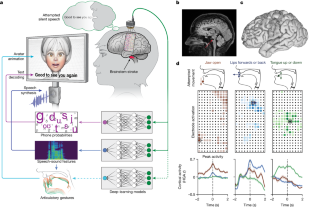

Speech neuroprostheses have the potential to restore communication to people living with paralysis, but naturalistic speed and expressivity are elusive¹. Here we use high-density surface recordings of the speech cortex in a clinical-trial participant with severe limb and vocal paralysis to achieve high-performance real-time decoding across three complementary speech-related output modalities: text, speech audio and facial-avatar animation. We trained and evaluated deep-learning models using neural data collected as the participant attempted to silently speak sentences. For text, we demonstrate accurate and rapid large-vocabulary decoding with a median rate of 78 words per minute and median word error rate of 25%. For speech audio, we demonstrate intelligible and rapid speech synthesis and personalization to the participant’s pre-injury voice. For facial-avatar animation, we demonstrate the control of virtual orofacial movements for speech and non-speech communicative gestures. The decoders reached high performance with less than two weeks of training. Our findings introduce a multimodal speech-neuroprosthetic approach that has substantial promise to restore full, embodied communication to people living with severe paralysis.
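For readers unfamiliar with the metric, word error rate is conventionally the word-level edit distance (substitutions, deletions and insertions) between the decoded sentence and the intended sentence, divided by the number of words in the intended sentence. The sketch below shows that standard definition; the function name and the example sentences are illustrative assumptions, and the paper's exact scoring procedure is not described in this abstract.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # deleting all i reference words matches an empty hypothesis
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# Illustrative sentences (not from the study): one wrong word out of four.
example_wer = word_error_rate("the quick brown fox", "the quick brown dog")
```

With these made-up sentences, one substituted word out of four reference words gives a rate of 0.25, which is the same magnitude as the 25% median reported above.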