2025-03-05 University of Texas at Austin (UT Austin)

<Related information>

- https://news.mccombs.utexas.edu/research/ai-can-open-up-beds-in-the-icu/

- https://pubsonline.informs.org/doi/abs/10.1287/isre.2023.0029

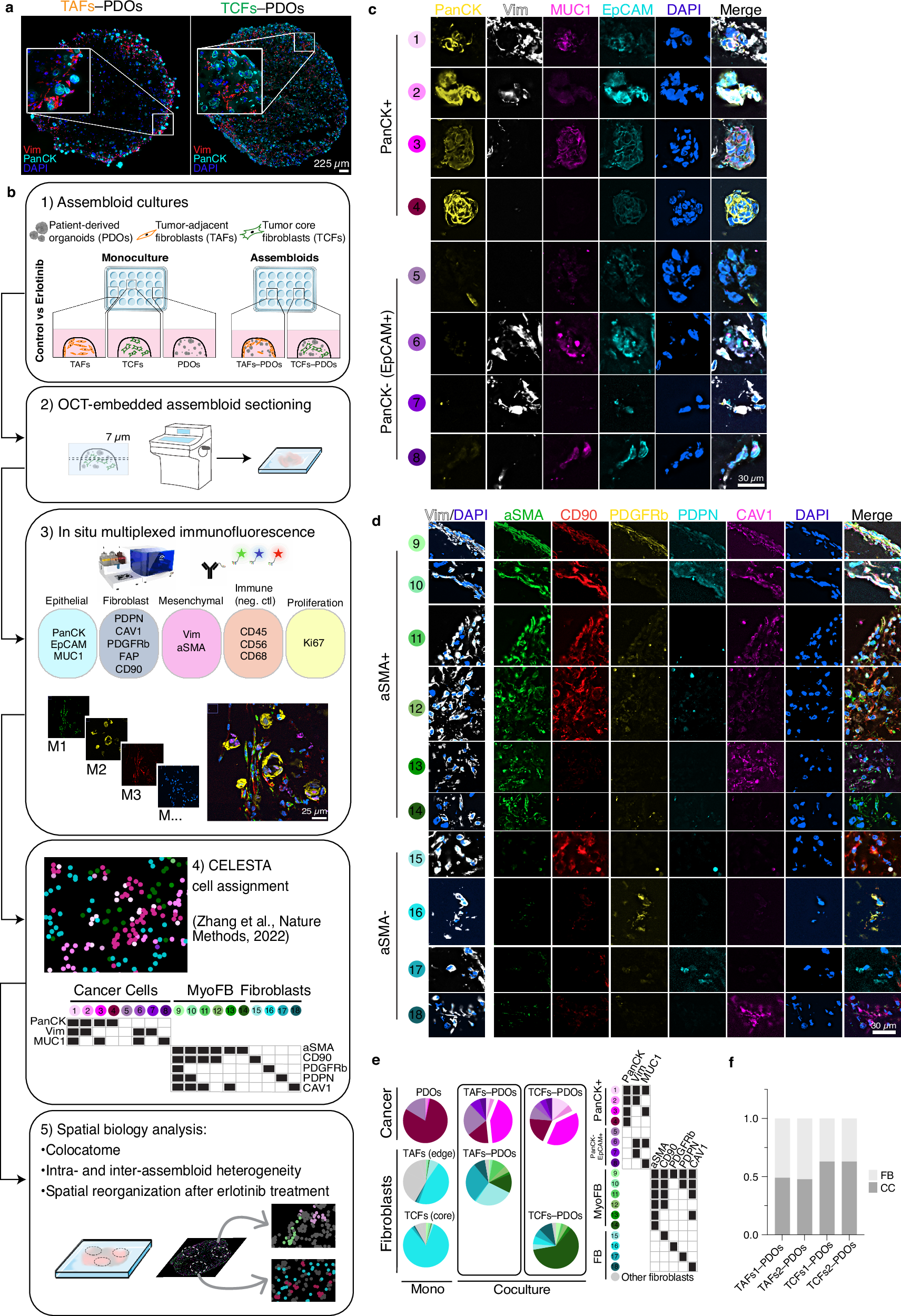

An Explainable Artificial Intelligence Approach Using Graph Learning to Predict Intensive Care Unit Length of Stay

Tianjian Guo, Indranil R. Bardhan, Ying Ding, Shichang Zhang

Information Systems Research, Published: 11 Dec 2024

DOI: https://doi.org/10.1287/isre.2023.0029

Abstract

Intensive care units (ICUs) are critical for treating severe health conditions but represent significant hospital expenditures. Accurate prediction of ICU length of stay (LoS) can enhance hospital resource management, reduce readmissions, and improve patient care. In recent years, widespread adoption of electronic health records and advancements in artificial intelligence (AI) have facilitated accurate predictions of ICU LoS. However, there is a notable gap in the literature on explainable artificial intelligence (XAI) methods that identify interactions between model input features to predict patient health outcomes. This gap is especially noteworthy as the medical literature suggests that complex interactions between clinical features are likely to significantly impact patient health outcomes. We propose a novel graph learning-based approach that offers state-of-the-art prediction and greater interpretability for ICU LoS prediction. Specifically, our graph-based XAI model can generate interaction-based explanations supported by evidence-based medicine, which provide rich patient-level insights compared with existing XAI methods. We test the statistical significance of our XAI approach using a distance-based separation index and utilize perturbation analyses to examine the sensitivity of our model explanations to changes in input features. Finally, we validate the explanations of our graph learning model using the conceptual evaluation property (Co-12) framework and a small-scale user study of ICU clinicians. Our approach offers interpretable predictions of ICU LoS grounded in design science research, which can facilitate greater integration of AI-enabled decision support systems in clinical workflows, thereby enabling clinicians to derive greater value.