2023-04-20 California Institute of Technology (Caltech)

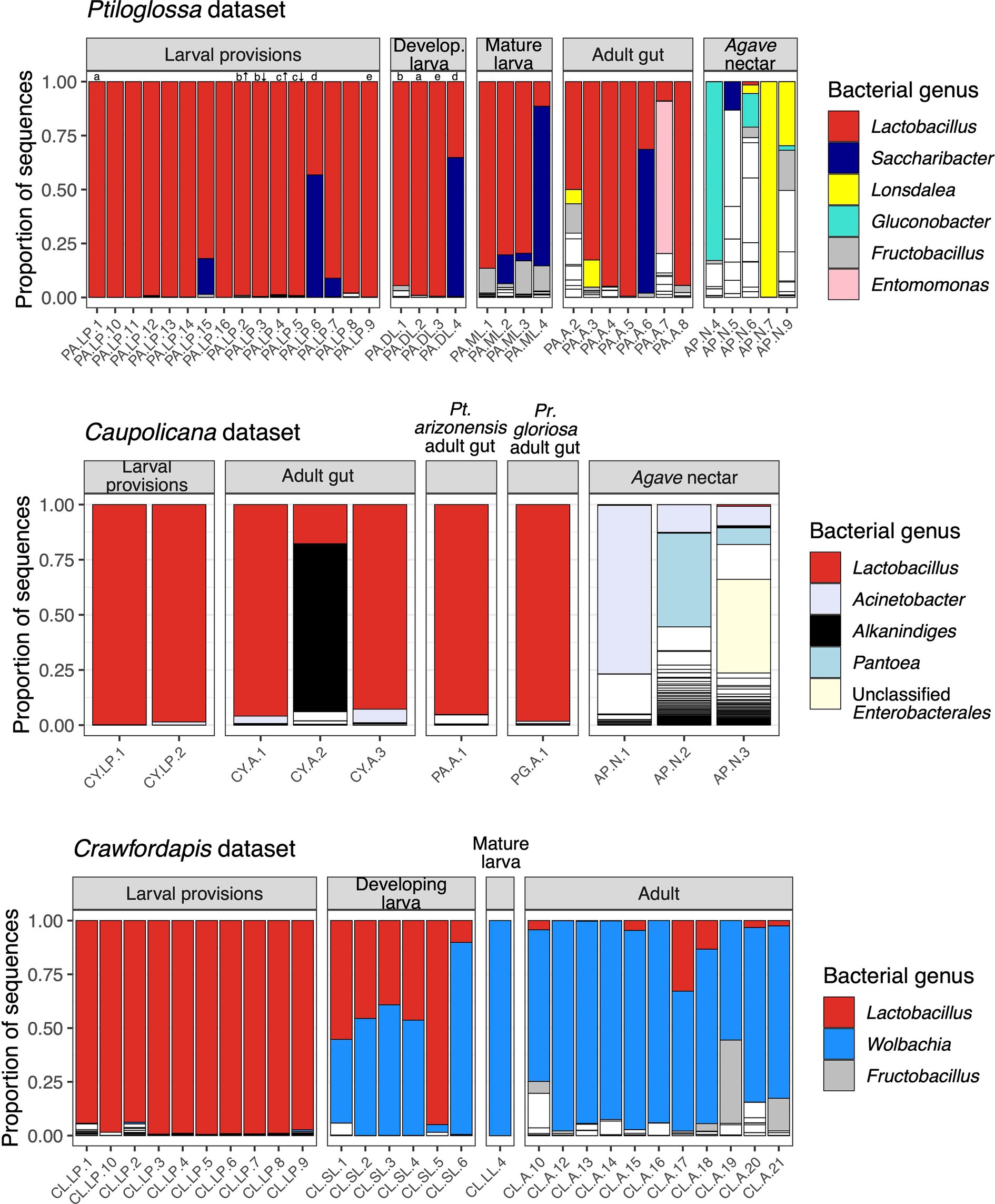

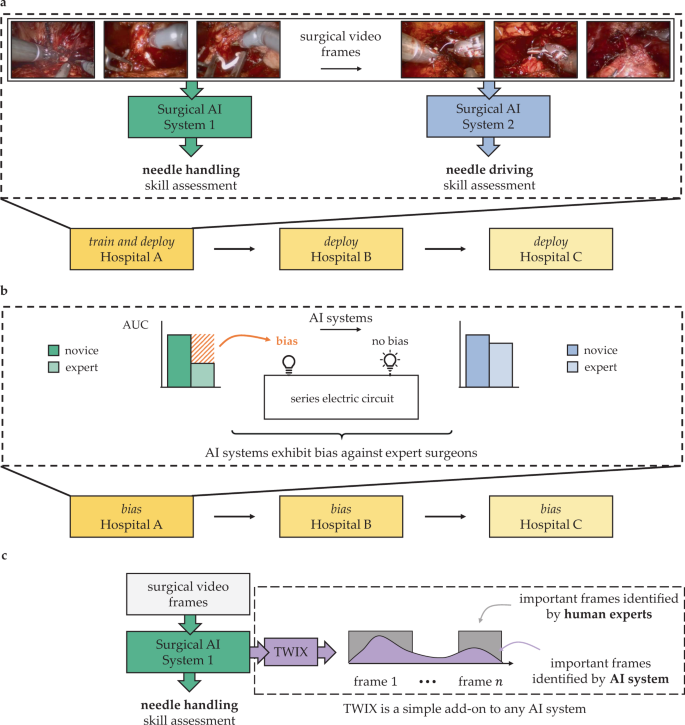

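Given a video of a surgical procedure, SAIS (surgical AI system) can identify which type of surgery is being performed and how well the surgeon performs it. The goal is to give surgeons an objective assessment of their performance so that they can improve their work and, in turn, patient outcomes.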

SAIS was trained on a large volume of video data to review surgical videos and evaluate surgeon performance.

<Related information>

- https://www.caltech.edu/about/news/ai-offers-tool-to-improve-surgeon-performance

- https://www.nature.com/articles/s41551-023-01010-8

- https://www.nature.com/articles/s41746-023-00766-2

- https://www.nature.com/articles/s43856-023-00263-3

A vision transformer for decoding surgeon activity from surgical videos

Dani Kiyasseh, Runzhuo Ma, Taseen F. Haque, Brian J. Miles, Christian Wagner, Daniel A. Donoho, Animashree Anandkumar & Andrew J. Hung

Nature Biomedical Engineering, published: 30 March 2023

DOI: https://doi.org/10.1038/s41551-023-01010-8

Abstract

The intraoperative activity of a surgeon has substantial impact on postoperative outcomes. However, for most surgical procedures, the details of intraoperative surgical actions, which can vary widely, are not well understood. Here we report a machine learning system leveraging a vision transformer and supervised contrastive learning for the decoding of elements of intraoperative surgical activity from videos commonly collected during robotic surgeries. The system accurately identified surgical steps, actions performed by the surgeon, the quality of these actions and the relative contribution of individual video frames to the decoding of the actions. Through extensive testing on data from three different hospitals located on two different continents, we show that the system generalizes across videos, surgeons, hospitals and surgical procedures, and that it can provide information on surgical gestures and skills from unannotated videos. Decoding intraoperative activity via accurate machine learning systems could be used to provide surgeons with feedback on their operating skills, and may allow for the identification of optimal surgical behaviour and for the study of relationships between intraoperative factors and postoperative outcomes.
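The abstract names supervised contrastive learning as one ingredient of the system. As a rough illustration of that technique in general (not the authors' code), the sketch below implements the standard supervised contrastive (SupCon) loss of Khosla et al. (2020) in NumPy: embeddings of clips that share a label, such as the same surgical step, are pulled together and clips with different labels are pushed apart. The function name, the default temperature of 0.1 and the input shapes are illustrative assumptions; how SAIS actually combines this loss with its vision transformer is not described here.

```python
import numpy as np


def supervised_contrastive_loss(embeddings: np.ndarray, labels: np.ndarray,
                                temperature: float = 0.1) -> float:
    """Standard SupCon loss over one batch (illustrative sketch, not SAIS code).

    embeddings: (N, D) array, one row per video clip (hypothetical input).
    labels:     (N,) integer labels, e.g. surgical step or gesture IDs.
    """
    n = labels.shape[0]
    not_self = ~np.eye(n, dtype=bool)

    # Positives: other samples that share the anchor's label.
    positives = (labels[:, None] == labels[None, :]) & not_self
    pos_counts = positives.sum(axis=1)
    has_pos = pos_counts > 0
    if not has_pos.any():
        return 0.0  # no anchor has a positive partner in this batch

    # L2-normalise rows so dot products become cosine similarities.
    z = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    sim = z @ z.T / temperature

    # Log-softmax over all *other* samples, shifted by the row max for stability.
    row_max = np.max(np.where(not_self, sim, -np.inf), axis=1, keepdims=True)
    shifted = sim - row_max
    denom = np.sum(np.where(not_self, np.exp(shifted), 0.0), axis=1, keepdims=True)
    log_prob = shifted - np.log(denom)

    # Average log-probability of the positives per anchor, then over anchors.
    summed = np.where(positives, log_prob, 0.0).sum(axis=1)
    mean_log_prob_pos = summed[has_pos] / pos_counts[has_pos]
    return float(-mean_log_prob_pos.mean())
```

A lower loss means that clips with the same label sit closer together in embedding space; the temperature controls how strongly the nearest neighbours dominate the softmax.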

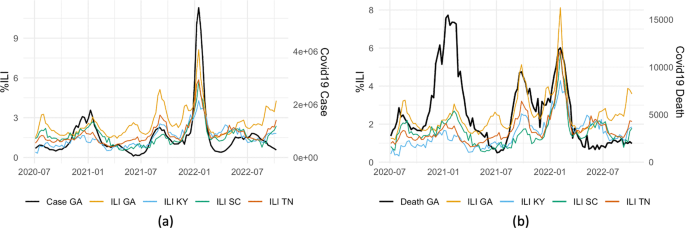

Human visual explanations mitigate bias in AI-based assessment of surgeon skills

Dani Kiyasseh, Jasper Laca, Taseen F. Haque, Maxwell Otiato, Brian J. Miles, Christian Wagner, Daniel A. Donoho, Quoc-Dien Trinh, Animashree Anandkumar & Andrew J. Hung

npj Digital Medicine, published: 30 March 2023

DOI: https://doi.org/10.1038/s41746-023-00766-2

Abstract

Artificial intelligence (AI) systems can now reliably assess surgeon skills through videos of intraoperative surgical activity. With such systems informing future high-stakes decisions such as whether to credential surgeons and grant them the privilege to operate on patients, it is critical that they treat all surgeons fairly. However, it remains an open question whether surgical AI systems exhibit bias against surgeon sub-cohorts, and, if so, whether such bias can be mitigated. Here, we examine and mitigate the bias exhibited by a family of surgical AI systems (SAIS) deployed on videos of robotic surgeries from three geographically diverse hospitals (USA and EU). We show that SAIS exhibits an underskilling bias, erroneously downgrading surgical performance, and an overskilling bias, erroneously upgrading surgical performance, at different rates across surgeon sub-cohorts. To mitigate such bias, we leverage a strategy (TWIX) that teaches an AI system to provide a visual explanation for its skill assessment that otherwise would have been provided by human experts. We show that whereas baseline strategies inconsistently mitigate algorithmic bias, TWIX can effectively mitigate the underskilling and overskilling bias while simultaneously improving the performance of these AI systems across hospitals. We discovered that these findings carry over to the training environment where we assess medical students’ skills today. Our study is a critical prerequisite to the eventual implementation of AI-augmented global surgeon credentialing programs, ensuring that all surgeons are treated fairly.
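To make "underskilling" and "overskilling at different rates across sub-cohorts" concrete, here is a minimal sketch assuming binary skill labels (1 = high skill, 0 = low skill). It uses hypothetical definitions: the underskilling rate is the share of truly high-skill samples the model rates as low skill, the overskilling rate is the share of truly low-skill samples rated as high skill, and bias is summarised as the largest gap in a rate between any two sub-cohorts. The paper's exact metric may differ, and the function and cohort names are illustrative.

```python
from collections import defaultdict


def skill_bias_rates(y_true, y_pred, groups):
    """Per-group underskilling and overskilling rates (hypothetical definitions).

    underskilling rate = share of truly high-skill (1) samples predicted low-skill (0)
    overskilling rate  = share of truly low-skill (0) samples predicted high-skill (1)
    """
    counts = defaultdict(lambda: {"high": 0, "under": 0, "low": 0, "over": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        c = counts[g]
        if t == 1:
            c["high"] += 1
            c["under"] += int(p == 0)  # high skill wrongly downgraded
        else:
            c["low"] += 1
            c["over"] += int(p == 1)  # low skill wrongly upgraded
    rates = {}
    for g, c in counts.items():
        under = c["under"] / c["high"] if c["high"] else float("nan")
        over = c["over"] / c["low"] if c["low"] else float("nan")
        rates[g] = {"underskilling": under, "overskilling": over}
    return rates


def bias_gap(rates, key):
    """Largest difference in one rate between any two groups (NaN rates ignored)."""
    values = [r[key] for r in rates.values() if r[key] == r[key]]  # NaN != NaN
    return max(values) - min(values) if values else 0.0


# Usage with made-up predictions for two hypothetical sub-cohorts.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 1, 0, 0]
groups = ["cohort_a"] * 4 + ["cohort_b"] * 4
rates = skill_bias_rates(y_true, y_pred, groups)
```

Here `cohort_a` has an underskilling rate of 0.5 while `cohort_b` has 0.0, so `bias_gap(rates, "underskilling")` is 0.5: the model downgrades one group's high-skill performances far more often than the other's.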

A multi-institutional study using artificial intelligence to provide reliable and fair feedback to surgeons

Dani Kiyasseh, Jasper Laca, Taseen F. Haque, Brian J. Miles, Christian Wagner, Daniel A. Donoho, Animashree Anandkumar & Andrew J. Hung

Communications Medicine, published: 30 March 2023

DOI: https://doi.org/10.1038/s43856-023-00263-3

Abstract

Background

Surgeons who receive reliable feedback on their performance quickly master the skills necessary for surgery. Such performance-based feedback can be provided by a recently developed artificial intelligence (AI) system that assesses a surgeon’s skills based on a surgical video while simultaneously highlighting aspects of the video most pertinent to the assessment. However, it remains an open question whether these highlights, or explanations, are equally reliable for all surgeons.

Methods

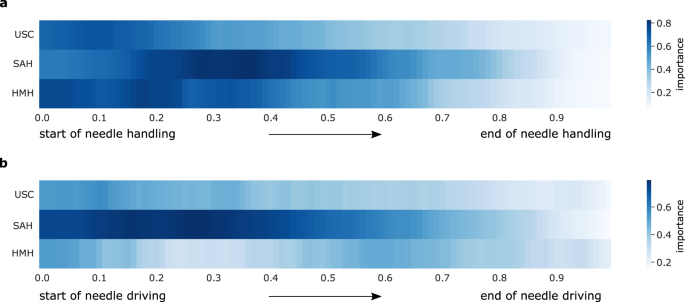

Here, we systematically quantify the reliability of AI-based explanations on surgical videos from three hospitals across two continents by comparing them to explanations generated by human experts. To improve the reliability of AI-based explanations, we propose the strategy of training with explanations (TWIX), which uses human explanations as supervision to explicitly teach an AI system to highlight important video frames.
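TWIX is described as using human explanations as supervision to teach the model which frames matter. One plausible way to express that idea, assuming the model emits a per-frame importance score alongside its skill prediction, is to add a second loss term that compares those scores with a binary mask of the frames human experts flagged. The sketch below uses binary cross-entropy for both terms and a hypothetical `weight` hyperparameter; it illustrates the general idea of training with explanations, not the published TWIX implementation.

```python
import numpy as np


def binary_cross_entropy(pred, target, eps=1e-7):
    """Mean binary cross-entropy; predictions are clipped to avoid log(0)."""
    pred = np.clip(np.asarray(pred, dtype=float), eps, 1 - eps)
    target = np.asarray(target, dtype=float)
    return float(-np.mean(target * np.log(pred) + (1 - target) * np.log(1 - pred)))


def twix_style_loss(skill_prob, skill_label, frame_importance, human_mask, weight=1.0):
    """Skill-assessment loss plus an explanation-supervision term (hypothetical).

    skill_prob:       predicted probability that the video shows high skill
    skill_label:      1 for high skill, 0 for low skill
    frame_importance: (T,) model's per-frame importance scores in [0, 1]
    human_mask:       (T,) 1 where human experts marked a frame as important
    weight:           assumed trade-off between the two terms
    """
    task = binary_cross_entropy([skill_prob], [skill_label])
    explanation = binary_cross_entropy(frame_importance, human_mask)
    return task + weight * explanation


# Usage: the same skill prediction, with importance scores that do or do not
# agree with the human-marked frames.
mask = np.array([0, 1, 1, 0])
aligned = twix_style_loss(0.9, 1, [0.1, 0.9, 0.9, 0.1], mask)
misaligned = twix_style_loss(0.9, 1, [0.9, 0.1, 0.1, 0.9], mask)
```

Because `aligned` is lower than `misaligned`, gradient descent on this combined loss would push the model's frame highlights towards the ones human experts chose, while the first term keeps it focused on the skill assessment itself.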

Results

We show that while AI-based explanations often align with human explanations, they are not equally reliable for different sub-cohorts of surgeons (e.g., novices vs. experts), a phenomenon we refer to as an explanation bias. We also show that TWIX enhances the reliability of AI-based explanations, mitigates the explanation bias, and improves the performance of AI systems across hospitals. These findings extend to a training environment where medical students can be provided with feedback today.
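One simple way to quantify the "explanation bias" described above, under stated assumptions, is to score how well the AI's highlighted frames match the human-marked frames for each video and then compare the average score across surgeon sub-cohorts. The sketch below uses a hypothetical precision metric (the fraction of the model's top-k frames that humans also marked, with k equal to the number of human-marked frames); the papers' actual reliability metric may differ, and all names are illustrative.

```python
import numpy as np


def explanation_precision(frame_importance, human_mask):
    """Fraction of the model's top-k frames that humans also marked,
    with k = number of human-marked frames (hypothetical metric)."""
    importance = np.asarray(frame_importance, dtype=float)
    mask = np.asarray(human_mask, dtype=bool)
    k = int(mask.sum())
    if k == 0:
        return float("nan")  # nothing to compare against
    top_k = np.argsort(-importance, kind="stable")[:k]
    return float(mask[top_k].mean())


def explanation_bias(videos):
    """videos: iterable of (cohort, frame_importance, human_mask) tuples.

    Returns the mean precision per cohort and the largest gap between cohorts.
    """
    per_cohort = {}
    for cohort, importance, mask in videos:
        score = explanation_precision(importance, mask)
        if not np.isnan(score):
            per_cohort.setdefault(cohort, []).append(score)
    means = {c: float(np.mean(s)) for c, s in per_cohort.items()}
    gap = max(means.values()) - min(means.values()) if means else 0.0
    return means, gap


# Usage with made-up scores: the model's highlights match the human ones for
# the expert video but only half-match for the novice video.
videos = [
    ("expert", [0.9, 0.8, 0.1, 0.2], [1, 1, 0, 0]),
    ("novice", [0.9, 0.1, 0.8, 0.2], [1, 1, 0, 0]),
]
means, gap = explanation_bias(videos)
```

A non-zero `gap` (0.5 in this toy example) signals that the AI's explanations are less reliable for one sub-cohort than another, which is the kind of disparity TWIX is reported to reduce.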

Conclusions

Our study informs the impending implementation of AI-augmented surgical training and surgeon credentialing programs, and contributes to the safe and fair democratization of surgery.

Plain language summary

Surgeons aim to master skills necessary for surgery. One such skill is suturing, which involves connecting objects together through a series of stitches. Mastering these surgical skills can be improved by providing surgeons with feedback on the quality of their performance. However, such feedback is often absent from surgical practice. Although performance-based feedback can, in theory, be provided by recently developed artificial intelligence (AI) systems that use a computational model to assess a surgeon’s skill, the reliability of this feedback remains unknown. Here, we compare AI-based feedback to that provided by human experts and demonstrate that they often overlap with one another. We also show that explicitly teaching an AI system to align with human feedback further improves the reliability of AI-based feedback on new videos of surgery. Our findings outline the potential of AI systems to support the training of surgeons by providing feedback that is reliable and focused on a particular skill, and guide programs that give surgeons qualifications by complementing skill assessments with explanations that increase the trustworthiness of such assessments.