2023-08-23 University of California, Berkeley (UCB)

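◆A brain-implant device converts brain signals into modulated speech and facial expressions, allowing a woman who lost the ability to speak after a stroke to converse and express emotion through a digital avatar.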

◆The study shows that a means of communication could be provided to people who cannot speak because of paralysis or disease. It was published in Nature.

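◆UC Berkeley assistant professor Gopala Anumanchipalli and Ph.D. student Kaylo Littlejohn explained this breakthrough study and discussed the development of brain-computer interfaces and the role of AI in diverse modes of communication.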

<Related information>

- https://engineering.berkeley.edu/news/2023/08/novel-brain-implant-helps-paralyzed-woman-speak-using-a-digital-avatar/

- https://www.nature.com/articles/s41586-023-06337-5

- https://www.nature.com/articles/s41467-022-33611-3

- https://www.nejm.org/doi/full/10.1056/NEJMoa2027540

An analog-AI chip for energy-efficient speech recognition and transcription

S. Ambrogio, P. Narayanan, A. Okazaki, A. Fasoli, C. Mackin, K. Hosokawa, A. Nomura, T. Yasuda, A. Chen, A. Friz, M. Ishii, J. Luquin, Y. Kohda, N. Saulnier, K. Brew, S. Choi, I. Ok, T. Philip, V. Chan, C. Silvestre, I. Ahsan, V. Narayanan, H. Tsai & G. W. Burr

Nature Published: 23 August 2023

DOI: https://doi.org/10.1038/s41586-023-06337-5

Abstract

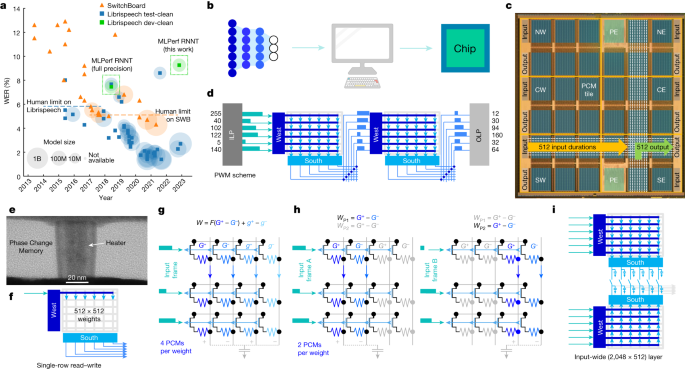

Models of artificial intelligence (AI) that have billions of parameters can achieve high accuracy across a range of tasks [1, 2], but they exacerbate the poor energy efficiency of conventional general-purpose processors, such as graphics processing units or central processing units. Analog in-memory computing (analog-AI) [3-7] can provide better energy efficiency by performing matrix–vector multiplications in parallel on ‘memory tiles’. However, analog-AI has yet to demonstrate software-equivalent (SWeq) accuracy on models that require many such tiles and efficient communication of neural-network activations between the tiles. Here we present an analog-AI chip that combines 35 million phase-change memory devices across 34 tiles, massively parallel inter-tile communication and analog, low-power peripheral circuitry that can achieve up to 12.4 tera-operations per second per watt (TOPS/W) chip-sustained performance. We demonstrate fully end-to-end SWeq accuracy for a small keyword-spotting network and near-SWeq accuracy on the much larger MLPerf [8] recurrent neural-network transducer (RNNT), with more than 45 million weights mapped onto more than 140 million phase-change memory devices across five chips.
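The idea of spreading one matrix–vector multiplication over several memory tiles can be illustrated with a small digital sketch. This is not the chip's implementation: the tile dimensions and the function names `matvec` and `tiled_matvec` are hypothetical, and the analog effects (device noise, limited precision) are ignored. It only shows that per-tile partial products, once accumulated, reproduce the full product.

```python
# Illustrative sketch only: tile sizes and helper names are assumptions,
# and real analog tiles add noise and limited precision, ignored here.

def matvec(weights, x):
    """Reference matrix-vector product y = W x on nested lists."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def tiled_matvec(weights, x, tile_rows, tile_cols):
    """Compute y = W x by splitting W into tiles and summing partial results.

    Each tile holds a tile_rows x tile_cols block of W and multiplies it by
    the matching slice of x. Partial sums from tiles that share the same
    output rows are accumulated into y.
    """
    n_rows, n_cols = len(weights), len(x)
    y = [0.0] * n_rows
    for r0 in range(0, n_rows, tile_rows):
        for c0 in range(0, n_cols, tile_cols):
            # Extract one tile and the slice of the input it needs.
            tile = [row[c0:c0 + tile_cols] for row in weights[r0:r0 + tile_rows]]
            partial = matvec(tile, x[c0:c0 + tile_cols])
            for i, value in enumerate(partial):
                y[r0 + i] += value
    return y


W = [[1, 2, 3, 4],
     [0, 1, 0, 1],
     [2, 0, 2, 0]]
x = [1, 1, 2, 2]

full = matvec(W, x)
tiled = tiled_matvec(W, x, tile_rows=2, tile_cols=2)
print(full, tiled)
```

In this toy case the 3 x 4 matrix is covered by four 2 x 2 tiles (the last row of tiles is only partly filled), and the accumulated result matches the untiled product.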

Generalizable spelling using a speech neuroprosthesis in an individual with severe limb and vocal paralysis

Sean L. Metzger, Jessie R. Liu, David A. Moses, Maximilian E. Dougherty, Margaret P. Seaton, Kaylo T. Littlejohn, Josh Chartier, Gopala K. Anumanchipalli, Adelyn Tu-Chan, Karunesh Ganguly & Edward F. Chang

Nature Communications Published: 08 November 2022

DOI: https://doi.org/10.1038/s41467-022-33611-3

Abstract

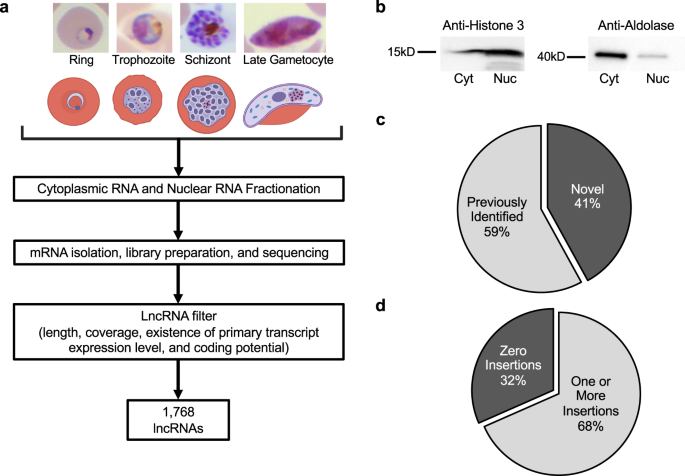

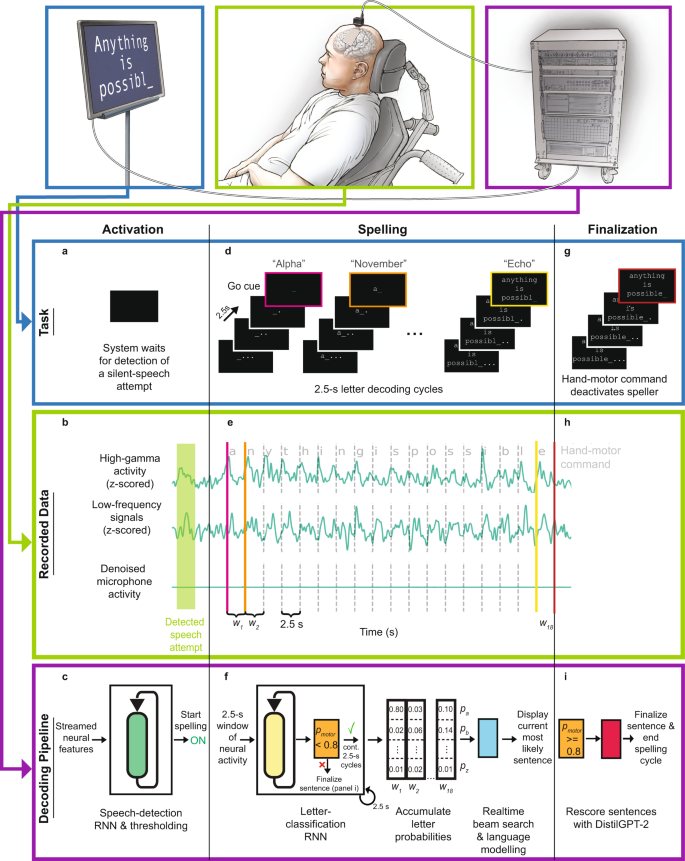

Neuroprostheses have the potential to restore communication to people who cannot speak or type due to paralysis. However, it is unclear if silent attempts to speak can be used to control a communication neuroprosthesis. Here, we translated direct cortical signals in a clinical-trial participant (ClinicalTrials.gov; NCT03698149) with severe limb and vocal-tract paralysis into single letters to spell out full sentences in real time. We used deep-learning and language-modeling techniques to decode letter sequences as the participant attempted to silently spell using code words that represented the 26 English letters (e.g. “alpha” for “a”). We leveraged broad electrode coverage beyond speech-motor cortex to include supplemental control signals from hand cortex and complementary information from low- and high-frequency signal components to improve decoding accuracy. We decoded sentences using words from a 1,152-word vocabulary at a median character error rate of 6.13% and speed of 29.4 characters per minute. In offline simulations, we showed that our approach generalized to large vocabularies containing over 9,000 words (median character error rate of 8.23%). These results illustrate the clinical viability of a silently controlled speech neuroprosthesis to generate sentences from a large vocabulary through a spelling-based approach, complementing previous demonstrations of direct full-word decoding.
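The character error rate quoted above is conventionally computed as the edit distance between the decoded and intended text, divided by the length of the intended text. The sketch below assumes that standard definition; the abstract does not spell out the scoring details. The `CODE_WORDS` table is a hypothetical three-entry excerpt: only "alpha" for "a" comes from the abstract, and the other entries are illustrative.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # delete a reference item
                            curr[j - 1] + 1,      # insert a hypothesis item
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def character_error_rate(reference, hypothesis):
    """Character-level edit distance divided by the reference length."""
    if not reference:
        raise ValueError("reference must be non-empty")
    return edit_distance(reference, hypothesis) / len(reference)


# Hypothetical excerpt of a code-word table; only "alpha" is from the abstract.
CODE_WORDS = {"alpha": "a", "bravo": "b", "charlie": "c"}


def spell(code_word_sequence):
    """Map a sequence of decoded code words to the letters they stand for."""
    return "".join(CODE_WORDS[word] for word in code_word_sequence)


decoded = spell(["charlie", "alpha", "bravo"])
print(decoded, character_error_rate("cab", decoded))
print(character_error_rate("hello world", "hallo world"))
```

Passing lists of words instead of strings to `edit_distance` gives the numerator of a word error rate in the same way.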

Neuroprosthesis for Decoding Speech in a Paralyzed Person with Anarthria

David A. Moses, Sean L. Metzger, Jessie R. Liu, Gopala K. Anumanchipalli, Joseph G. Makin, Pengfei F. Sun, Josh Chartier, Maximilian E. Dougherty, Patricia M. Liu, Gary M. Abrams, Adelyn Tu-Chan, Karunesh Ganguly, and Edward F. Chang

New England Journal of Medicine Published: July 15, 2021

DOI: 10.1056/NEJMoa2027540

Abstract

BACKGROUND

Technology to restore the ability to communicate in paralyzed persons who cannot speak has the potential to improve autonomy and quality of life. An approach that decodes words and sentences directly from the cerebral cortical activity of such patients may represent an advancement over existing methods for assisted communication.

METHODS

We implanted a subdural, high-density, multielectrode array over the area of the sensorimotor cortex that controls speech in a person with anarthria (the loss of the ability to articulate speech) and spastic quadriparesis caused by a brain-stem stroke. Over the course of 48 sessions, we recorded 22 hours of cortical activity while the participant attempted to say individual words from a vocabulary set of 50 words. We used deep-learning algorithms to create computational models for the detection and classification of words from patterns in the recorded cortical activity. We applied these computational models, as well as a natural-language model that yielded next-word probabilities given the preceding words in a sequence, to decode full sentences as the participant attempted to say them.
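One way to picture how per-word classifier outputs and next-word probabilities from a language model can be combined into a sentence is Viterbi decoding over a bigram model. The abstract does not name the search procedure, so the algorithm choice, the function `viterbi_decode`, the toy vocabulary, and all probabilities below are assumptions made purely for illustration.

```python
import math


def viterbi_decode(word_probs, bigram, vocab, start_probs):
    """Return the most likely word sequence under classifier + bigram scores.

    word_probs:  list of dicts, classifier probability per word for each attempt.
    bigram:      dict mapping (previous_word, word) -> next-word probability.
    start_probs: dict mapping word -> probability of starting a sentence.
    """
    if not word_probs:
        return []
    floor = 1e-12  # avoids log(0) for events missing from the tables

    def log(p):
        return math.log(max(p, floor))

    # best[w] = (log score of the best path ending in w, that path)
    best = {w: (log(start_probs.get(w, 0)) + log(word_probs[0].get(w, 0)), [w])
            for w in vocab}
    for probs in word_probs[1:]:
        new_best = {}
        for w in vocab:
            # Pick the predecessor that maximizes path score plus transition.
            score, path = max(
                ((best[p][0] + log(bigram.get((p, w), 0)), best[p][1]) for p in vocab),
                key=lambda item: item[0],
            )
            new_best[w] = (score + log(probs.get(w, 0)), path + [w])
        best = new_best
    return max(best.values(), key=lambda item: item[0])[1]


# Toy example: all numbers are made up.
vocab = ["i", "am", "thirsty"]
word_probs = [
    {"i": 0.6, "am": 0.3, "thirsty": 0.1},
    {"i": 0.5, "am": 0.4, "thirsty": 0.1},  # classifier alone prefers "i"
    {"i": 0.1, "am": 0.2, "thirsty": 0.7},
]
start_probs = {"i": 0.8, "am": 0.15, "thirsty": 0.05}
bigram = {
    ("i", "i"): 0.05, ("i", "am"): 0.9, ("i", "thirsty"): 0.05,
    ("am", "i"): 0.1, ("am", "am"): 0.1, ("am", "thirsty"): 0.8,
    ("thirsty", "i"): 0.4, ("thirsty", "am"): 0.3, ("thirsty", "thirsty"): 0.3,
}

greedy = [max(p, key=p.get) for p in word_probs]
decoded = viterbi_decode(word_probs, bigram, vocab, start_probs)
print(greedy, decoded)
```

In this toy case the classifier alone would output "i i thirsty", while the language-model term steers the second word to "am".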

RESULTS

We decoded sentences from the participant’s cortical activity in real time at a median rate of 15.2 words per minute, with a median word error rate of 25.6%. In post hoc analyses, we detected 98% of the attempts by the participant to produce individual words, and we classified words with 47.1% accuracy using cortical signals that were stable throughout the 81-week study period.

CONCLUSIONS

In a person with anarthria and spastic quadriparesis caused by a brain-stem stroke, words and sentences were decoded directly from cortical activity during attempted speech with the use of deep-learning models and a natural-language model. (Funded by Facebook and others; ClinicalTrials.gov number, NCT03698149.)